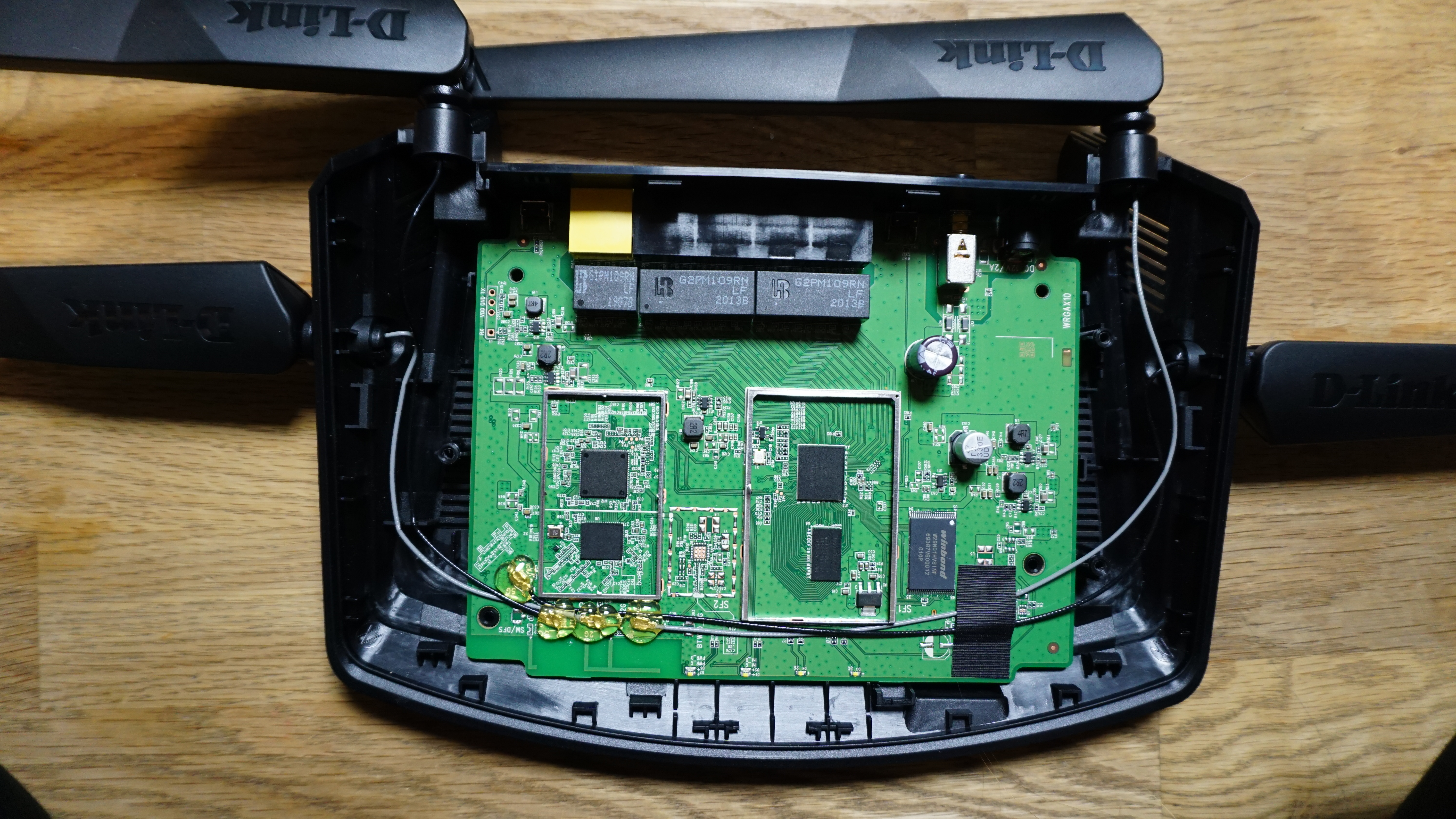

- CPU: MediaTek MT7621AT, a ramips-based CPU with 2 cores

- RAM: winbond w632... (too small to read ;)

- flash: 128 MByte NAND winbond w29n01hvsinf

- wifi: ax with a mt7915dan (2T2R 2.4 GHz, 2T2R 5 GHz) + mt7975dn

I was asked to take a look at a Supermicro server that had been damaged by a firmware update. It was a Supermicro X10DRW-IT. The firmware update was attempted from USB storage, but somehow failed.

After powering the system, it went like this:

However, the IPMI still seems to boot and, unlike the rest of the mainboard, it stays powered. But the IPMI doesn't accept any BIOS update anymore.

As preparation I read up on the coreboot support for the Supermicro X10SLM+-F [0]

The BIOS chip is hidden under the RAID controller, if you have one.

First I took a look at the BIOS flash. To read it out, I used a Raspberry Pi 3 with a SOIC-8 clip on the SPI bus.

Required tools:

How to connect the SPI SOIC chip is described in [2]. Pin 1 of the SPI chip is marked by the small hole on the chip package.

Make sure you disconnect both power supplies from the mainboard.

    sudo flashrom -p linux_spi:dev=/dev/spidev0.0,spispeed=1000 -r bios
    sudo flashrom -p linux_spi:dev=/dev/spidev0.0,spispeed=1000 -r bios2
    # ensure the checksums are equal, to make sure you read real data
    sha256sum bios bios2
    # try to get some strings out of it, to make sure you didn't only read 0xffff or 0x0000
    strings -n 16 bios

Next I downloaded the Supermicro BIOS update. It contains:

    tree .
    .
    ├── DOS
    │   ├── AFUDOSU.SMC
    │   ├── CHOICE.SMC
    │   ├── FDT.smc
    │   ├── FLASH.BAT
    │   ├── Readme for X10 AMI BIOS-DOS+UEFI.txt
    │   └── X10DRW9.B22
    └── UEFI
        ├── Readme for X10 AMI BIOS-DOS+UEFI.txt
        ├── X10DRW9.B22
        ├── afuefi.smc
        ├── fdt.smc
        └── flash.nsh

    ls -al X10DRW9.B22
    -rw------- 1 lynxis users 16777216 Nov 22 16:07 X10DRW9.B22

Sounds good: its size is exactly 16 MB, the same size as the BIOS flash. file also tells me what it is.

    file X10DRW9.B22
    X10DRW9.B22: Intel serial flash for PCH ROM

Great, we found a firmware image with an IFD (Intel Firmware Descriptor). Next I looked at the BIOS backup we read with the Raspberry Pi.

I used hexdump -C bios to see if the end contains a lot of 1 bits (0xff bytes). Why? Because you cannot write to a SPI flash the way you write to a hard drive. SPI flash chips are organised in blocks. A block is usually 64 kByte. Writing can only change a bit from 1 to 0. If you want to turn a 0 back into a 1, you have to erase the whole block, not only the address. An erased block is full of 1s. So to find out whether we have a half-written flash, we can check whether the end of the flash contains a lot of 1s (0xffffffff).

hexdump -C shows it quite nicely:

    00c2ee20  4d 50 44 54 00 01 00 00  10 00 00 00 00 00 10 00  |MPDT............|
    00c2ee30  ff ff ff ff ff ff ff ff  ff ff ff ff ff ff ff ff  |................|
    *
    01000000

This means the flash was only written up to 0x00c2ee30 (12.2 MB). Now we can look into the downloaded image to see whether it looks similar at that offset. Maybe configuration data starts here? But no, the backup is missing data here.
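The same check can be scripted instead of reading hexdump output by eye. This is a minimal sketch; the function names are made up for illustration, and a real dump would be loaded with `open("bios", "rb").read()`:

```python
def erased_tail_start(dump: bytes) -> int:
    """Return the offset at which the trailing run of 0xff bytes begins.

    If the dump does not end in 0xff, the result equals len(dump).
    """
    return len(dump.rstrip(b"\xff"))


def describe(dump: bytes) -> str:
    """Summarize where the data ends and how many erased bytes follow."""
    start = erased_tail_start(dump)
    return f"data ends at 0x{start:08x}, {len(dump) - start} erased bytes follow"


# Tiny in-memory stand-in for a flash dump: 4 data bytes, 12 erased bytes.
sample = b"MPDT" + b"\xff" * 12
print(describe(sample))
```

A long erased tail is only a hint: a valid image may also end in unused, erased blocks, so the result still has to be compared against the vendor image.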

The next task is to flash the BIOS section. BIOS section? The Intel Firmware Descriptor defines sections, similar to a partition layout on a hard drive. On this platform there are 3 different sections:

    00000000:00000fff fd
    00400000:00ffffff bios
    00011000:003fffff me

To flash only one section, you either have to use a recent flashrom version (at least 1.0) or extract the layout file using ifdtool (from coreboot). You can also use the last code snippet as the layout file.
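To double-check a layout file before flashing, it can help to parse it and see which section a given offset falls into. The following is only a sketch under the assumption that every line has the form `start:end name` with hexadecimal, inclusive addresses, as in the listing above; the helper names are hypothetical:

```python
from typing import Dict, Optional, Tuple


def parse_layout(text: str) -> Dict[str, Tuple[int, int]]:
    """Parse 'start:end name' lines into {name: (start, end)}."""
    regions = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        span, name = line.split()
        start, end = (int(part, 16) for part in span.split(":"))
        regions[name] = (start, end)
    return regions


def region_of(regions: Dict[str, Tuple[int, int]], offset: int) -> Optional[str]:
    """Return the name of the region containing offset, or None."""
    for name, (start, end) in regions.items():
        if start <= offset <= end:
            return name
    return None


LAYOUT = """\
00000000:00000fff fd
00400000:00ffffff bios
00011000:003fffff me
"""

regions = parse_layout(LAYOUT)
for name, (start, end) in regions.items():
    print(f"{name:5s} {end - start + 1:>9d} bytes")
# Offset where the half-written backup turned into 0xff:
print(region_of(regions, 0x00C2EE30))
```

With this layout, the offset 0x00c2ee30 lies inside the bios section, which is 12 MiB in size.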

flashrom -p linux_spi:dev=/dev/spidev0.0,spispeed=1000 -l layout -i bios -w X10DRW9.B22

flashrom verifies the flash after writing to it. But the board still doesn't work. My next thought was that maybe the IPMI flash got damaged as well. The SPI flash of the IPMI is close by, so let's have a look. I was lucky to also have a SOIC-16 test clip available. I did the same procedure on the IPMI flash. However, the flashrom in Raspbian was too old and didn't know the flash chip. I had to compile it myself.

    sudo apt install git build-essential
    git clone https://review.coreboot.org/flashrom.git
    cd flashrom
    make CONFIG_ENABLE_LIBPCI_PROGRAMMERS=no CONFIG_ENABLE_LIBUSB1_PROGRAMMERS=no
    ./flashrom -p linux_spi:dev=/dev/spidev0.0,spispeed=1000 -r ipmi

The dump looks good so far. Running binwalk on it shows:

    binwalk ipmi

    DECIMAL       HEXADECIMAL     DESCRIPTION
    --------------------------------------------------------------------------------
    103328        0x193A0         CRC32 polynomial table, little endian
    1048576       0x100000        JFFS2 filesystem, little endian
    4194304       0x400000        CramFS filesystem, little endian, size: 15216640, version 2, sorted_dirs, CRC 0xB1031FF3, edition 0, 8613 blocks, 1099 files
    20971520      0x1400000       uImage header, header size: 64 bytes, header CRC: 0x3F1E0DA5, created: 2019-11-15 08:36:11, image size: 1537512 bytes, Data Address: 0x40008000, Entry Point: 0x40008000, data CRC: 0x310498CA, OS: Linux, CPU: ARM, image type: OS Kernel Image, compression type: gzip, image name: "21400000"
    20971584      0x1400040       gzip compressed data, maximum compression, has original file name: "linux.bin", from Unix, last modified: 2019-11-15 07:25:15
    24117248      0x1700000       CramFS filesystem, little endian, size: 7458816, version 2, sorted_dirs, CRC 0xF5B9463B, edition 0, 3108 blocks, 466 files

This looks good as well, but I wanted to be sure it's fine. First I made a backup, then I overwrote the flash with a file from the IPMI firmware update.

Still no change.

So what's wrong here? Is the power management controller damaged? The power supplies are controlled digitally via I2C. Maybe it's somehow telling me something is wrong?

I was lucky that I didn't have an I2C sniffer around, otherwise I would have dug into it. I nearly gave up before I found out that the backup file didn't work with ifdtool. I had exported the layout from the firmware update file, not from the backup file. Usually firmware updates do not touch the IFD. It seems server boards are different. So the backup didn't contain an IFD: the flash wasn't only damaged at the end, but also at the beginning. I'm not sure if this is a safety feature of the update. It might ensure that, from the beginning of an update, the partially written flash isn't recognized as a working image. Booting a half-working image is not a good thing.

I flashed the full BIOS firmware update image and the board is back. To be sure, I flashed the IPMI backup back onto the SPI chip.

TL;DR: The fast way to recover a partially flashed BIOS: do a backup first! Then flash the full image. At least for this generation it works.

Note: Depending on your specific hardware setup (cable length, test clip) you can increase or decrease the spispeed. spispeed=10000 => 10 MHz should be still ok. You'll notice the wrong spispeed if the reading or flashing fails.

[0] https://doc.coreboot.org/mainboard/supermicro/x10slm-f.html

[1] https://www.pomonaelectronics.com/products/test-clips/soic-clip-8-pin

[2] https://github.com/bibanon/Coreboot-ThinkPads/wiki/Hardware-Flashing-with-Raspberry-Pi

Similar to lolamby's regular posts on his free software contributions, I want to start a similar series.

I spent a big chunk of December preparing, together with the GSM team, the cellular network at the 36c3 (36th Chaos Communication Congress). Every year we build our own cellular network using the free software projects osmocom & open5gs. Osmocom is a community project around mobile communication. We used osmocom to run the core network (CN) of our 2G and 3G network. Open5gs is our LTE CN, which was interconnected with the osmocom CN. Here is an overview; every bubble is its own daemon.

The 36c3 was a nice testing ground. We had to extend a couple of the services (e.g. osmomsc, osmogsup2dia, osmohnbgw). During the event we also like to enjoy the congress, so this is our excuse for not upstreaming our patches right away. However, we push our branches as they are to https://git.osmocom.org. More upstreaming will happen in January.

Last week I visited thomasdotwtf from eventphone, who has a Jura coffee machine. We took one evening to look into how easy it is to control it with a generic BLE device like a Raspberry Pi. He has a Jura Z8 automatic coffee machine, which supports an iOS/Android app via Bluetooth LE.

Jura released (at least) two different apps to control it.

Both apps support ordering and changing properties of a coffee (e.g. how much water do you want, or how much coffee should be in there?). mkssystems.pl seems to have gone out of service, but the Internet Archive still has an old version, which shows a lot of coffee-machine-related products, as well as a small blue device [1].

This is the BlueFrog, a Bluetooth dongle to control Jura coffee machines.

- bluetooth packettrace: We used the android btsnoop.log to retrieve a packet trace which we loaded into wireshark.

- decompiled the .apk with different tools

- loaded the source code into android studio

The J.O.E. application is using XML files to be configured for the different coffee machines. The XML defines products (e.g. a coffee, a green tea, ...), there are properties (e.g. how much coffee should be produced), statistics and settings. The article number defines the XML file to be used.

A firmware process including the update urls and the new firmware.

We tried to find the commands that should work on the RS232/serial interface in the Bluetooth packet trace, but there weren't any. After looking further into the code, we found a lot of UUIDs for characteristics, including human-readable names. We also discovered an "encryption" method which uses 2 hardcoded keys as well as an additional 8-bit input from the BLE advertisement. The encryption looks like a static key.

The BLE advertisement contains manufacturer data. In our case, the manufacturer data contains 27 bytes. 16-bit fields are little endian.

    manufacturer data as hex (27 bytes):

    aa 05 06 03 d73a yyyy xxxx 5836 4435 01 c0 00 00 00 00 00 00 00 00 00 00 00

    aa:   key
    05:   BlueFrog Major Version
    06:   BlueFrog Minor Version
    03:   unused (maybe Patch Version?)
    d73a: article number (the specific type of the machine)
    yyyy: machine number
    xxxx: serial number
    5836: production date (Feb. 2017)
    4435: production date UHCI (does UHCI mean the BlueFrog?) (Oct. 2016)
    01:   unused
    c0:   bitmask, defines supported features

The production dates can be decoded and also validated using the application where it's shown in the connection fragment:

    days:  (i & 31)
    month: ((i & 480) >> 5)
    year:  ((i & 65024) >> 9) + 1990;
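Applied to the two date fields from the advertisement, the formula can be checked with a few lines of Python. This is a small sketch; the function name is made up, and the bytes are interpreted as little endian, as described above:

```python
from typing import Tuple


def decode_production_date(raw: bytes) -> Tuple[int, int, int]:
    """Decode a 16-bit little-endian date field into (year, month, day)."""
    i = int.from_bytes(raw, "little")
    day = i & 31                       # bits 0-4
    month = (i & 480) >> 5             # bits 5-8
    year = ((i & 65024) >> 9) + 1990   # bits 9-15, offset from 1990
    return year, month, day


print(decode_production_date(bytes.fromhex("5836")))  # (2017, 2, 24)
print(decode_production_date(bytes.fromhex("4435")))  # (2016, 10, 4)
```

Both results match the months noted in the field list (Feb. 2017 and Oct. 2016).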

Write a decrypt function which can parse pcap files and shows the message or write a dissector (lua) for wireshark with decryption function.

Find out how to map the XML files into commands towards the BlueFrog.

The good thing about BLE is that the communication is standardized. BLE uses the Bluetooth Attribute Protocol (ATT) and, on top of it, the Generic Attribute Profile (GATT), which organizes data into services and characteristics. A service is an object which can hold multiple characteristics. A characteristic can support one or more of the following operations: read, write, notification, indication. Every service has a UUID, and so does every characteristic. The protocol has its own methods to discover available services and characteristics. For more information please take a closer look into Bluetooth Low Energy.

As a general BLE device, the BlueFrog announces itself via BLE advertisements.
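Tools like gatttool report the supported operations of a characteristic as a bitmask ("char properties"). The sketch below decodes that bitmask using the property bits defined by the Bluetooth Core Specification; the function name and the short labels are only illustrative:

```python
from typing import List

# Characteristic property bits as defined by the Bluetooth Core Specification.
PROPERTY_BITS = [
    (0x01, "broadcast"),
    (0x02, "read"),
    (0x04, "write-without-response"),
    (0x08, "write"),
    (0x10, "notify"),
    (0x20, "indicate"),
    (0x40, "authenticated-signed-writes"),
    (0x80, "extended-properties"),
]


def decode_properties(value: int) -> List[str]:
    """Return the names of all property bits set in value."""
    return [name for bit, name in PROPERTY_BITS if value & bit]


for props in (0x02, 0x04, 0x08, 0x0A, 0x18):
    print(f"0x{props:02x}: {', '.join(decode_properties(props))}")
```

So 0x0a means read and write, while 0x18 means write and notify.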

    > hcitool lescan
    LE Scan ...
    C9:26:E8:4B:72:02 TT214H BlueFrog

    > HCI Event: LE Meta Event (0x3e) plen 43    #8 [hci0] 8.466202
          LE Advertising Report (0x02)
            Num reports: 1
            Event type: Scan response - SCAN_RSP (0x04)
            Address type: Random (0x01)
            Address: C9:26:E8:4B:72:02 (Static)
            Data length: 31
            Company: Ingenieur-Systemgruppe Zahn GmbH (171)
              Data: aa050603d73a080402005836443501c00000000000000000000000
            RSSI: -78 dBm (0xb2)
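The manufacturer data from this scan response can be split into its fields with `struct`. This is a sketch based on the field list given earlier; the class and field names are made up, and the meaning of the fields is as far as we understood it:

```python
import struct
from typing import NamedTuple


class BlueFrogAdvert(NamedTuple):
    key: int
    major_version: int
    minor_version: int
    unused_a: int
    article_number: int
    machine_number: int
    serial_number: int
    production_date: int
    production_date_uhci: int
    unused_b: int
    features: int


def parse_manufacturer_data(data: bytes) -> BlueFrogAdvert:
    """Split the 27-byte manufacturer data into its known fields.

    Layout: 4 single bytes, 5 little-endian 16-bit fields, 2 single bytes;
    the remaining 11 bytes are zero padding and are ignored here.
    """
    if len(data) != 27:
        raise ValueError(f"expected 27 bytes, got {len(data)}")
    return BlueFrogAdvert(*struct.unpack_from("<4B5H2B", data))


advert = parse_manufacturer_data(
    bytes.fromhex("aa050603d73a080402005836443501c00000000000000000000000")
)
print(advert)
```

The two production date fields are left as raw 16-bit values here; they use the day/month/year bit layout described above.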

Furthermore, we can also look up the services and characteristics via gatttool.

    > gatttool -b C9:26:E8:4B:72:02 --services -t random
    attr handle = 0x0001, end grp handle = 0x0007 uuid: 00001800-0000-1000-8000-00805f9b34fb
    attr handle = 0x0008, end grp handle = 0x0008 uuid: 00001801-0000-1000-8000-00805f9b34fb
    attr handle = 0x0009, end grp handle = 0x0033 uuid: 5a401523-ab2e-2548-c435-08c300000710
    attr handle = 0x0034, end grp handle = 0x003a uuid: 5a401623-ab2e-2548-c435-08c300000710
    attr handle = 0x003b, end grp handle = 0xffff uuid: 00001530-1212-efde-1523-785feabcd123

    > gatttool -b C9:26:E8:4B:72:02 --characteristics -t random
    handle = 0x0002, char properties = 0x0a, char value handle = 0x0003, uuid = 00002a00-0000-1000-8000-00805f9b34fb
    handle = 0x0004, char properties = 0x02, char value handle = 0x0005, uuid = 00002a01-0000-1000-8000-00805f9b34fb
    handle = 0x0006, char properties = 0x02, char value handle = 0x0007, uuid = 00002a04-0000-1000-8000-00805f9b34fb
    handle = 0x000a, char properties = 0x02, char value handle = 0x000b, uuid = 5a401524-ab2e-2548-c435-08c300000710
    handle = 0x000d, char properties = 0x08, char value handle = 0x000e, uuid = 5a401525-ab2e-2548-c435-08c300000710
    handle = 0x0010, char properties = 0x08, char value handle = 0x0011, uuid = 5a401529-ab2e-2548-c435-08c300000710
    handle = 0x0013, char properties = 0x08, char value handle = 0x0014, uuid = 5a401528-ab2e-2548-c435-08c300000710
    handle = 0x0016, char properties = 0x0a, char value handle = 0x0017, uuid = 5a401530-ab2e-2548-c435-08c300000710
    handle = 0x0019, char properties = 0x02, char value handle = 0x001a, uuid = 5a401527-ab2e-2548-c435-08c300000710
    handle = 0x001c, char properties = 0x02, char value handle = 0x001d, uuid = 5a401531-ab2e-2548-c435-08c300000710
    handle = 0x001f, char properties = 0x0a, char value handle = 0x0020, uuid = 5a401532-ab2e-2548-c435-08c300000710
    handle = 0x0022, char properties = 0x0a, char value handle = 0x0023, uuid = 5a401535-ab2e-2548-c435-08c300000710
    handle = 0x0025, char properties = 0x0a, char value handle = 0x0026, uuid = 5a401533-ab2e-2548-c435-08c300000710
    handle = 0x0028, char properties = 0x02, char value handle = 0x0029, uuid = 5a401534-ab2e-2548-c435-08c300000710
    handle = 0x002b, char properties = 0x02, char value handle = 0x002c, uuid = 5a401536-ab2e-2548-c435-08c300000710
    handle = 0x002e, char properties = 0x02, char value handle = 0x002f, uuid = 5a401537-ab2e-2548-c435-08c300000710
    handle = 0x0031, char properties = 0x02, char value handle = 0x0032, uuid = 5a401538-ab2e-2548-c435-08c300000710
    handle = 0x0035, char properties = 0x02, char value handle = 0x0036, uuid = 5a401624-ab2e-2548-c435-08c300000710
    handle = 0x0038, char properties = 0x08, char value handle = 0x0039, uuid = 5a401625-ab2e-2548-c435-08c300000710
    handle = 0x003c, char properties = 0x04, char value handle = 0x003d, uuid = 00001532-1212-efde-1523-785feabcd123
    handle = 0x003e, char properties = 0x18, char value handle = 0x003f, uuid = 00001531-1212-efde-1523-785feabcd123

| start | end | uuid | name |
|---|---|---|---|
| 0x0001 | 0x0007 | 0x1800 | Generic Access Profile |
| 0x0008 | 0x0008 | 0x1801 | Generic Attribute Profile |
| 0x0009 | 0x0033 | 5a401523-ab2e-2548-c435-08c300000710 | |
| 0x0034 | 0x003a | 5a401623-ab2e-2548-c435-08c300000710 | |
| 0x003b | 0xffff | 00001530-1212-efde-1523-785feabcd123 | |

| handle | value handle | properties | uuid | description |
|---|---|---|---|---|
| 0x0002 | 0x0003 | RW (0xa) | 00002a00-0000-1000-8000-00805f9b34fb | |
| 0x0004 | 0x0005 | R (0x2) | 00002a01-0000-1000-8000-00805f9b34fb | |
| 0x0006 | 0x0007 | R (0x2) | 00002a04-0000-1000-8000-00805f9b34fb | |

| handle | value handle | properties | uuid | description |
|---|---|---|---|---|
| 0x000a | 0x000b | R (0x2) | 5a401524-ab2e-2548-c435-08c300000710 | Machine Status |
| 0x000d | 0x000e | W (0x8) | 5a401525-ab2e-2548-c435-08c300000710 | Product Start |
| 0x0010 | 0x0011 | W (0x8) | 5a401529-ab2e-2548-c435-08c300000710 | Service Control |
| 0x0013 | 0x0014 | W (0x8) | 5a401528-ab2e-2548-c435-08c300000710 | Update Product Progress |
| 0x0016 | 0x0017 | RW (0xa) | 5a401530-ab2e-2548-c435-08c300000710 | Product Progress |
| 0x0019 | 0x001a | R (0x2) | 5a401527-ab2e-2548-c435-08c300000710 | About |
| 0x001c | 0x001d | R (0x2) | 5a401531-ab2e-2548-c435-08c300000710 | |
| 0x001f | 0x0020 | RW (0xa) | 5a401532-ab2e-2548-c435-08c300000710 | |
| 0x0022 | 0x0023 | RW (0xa) | 5a401535-ab2e-2548-c435-08c300000710 | |
| 0x0025 | 0x0026 | RW (0xa) | 5a401533-ab2e-2548-c435-08c300000710 | Statistics command |
| 0x0028 | 0x0029 | R (0x2) | 5a401534-ab2e-2548-c435-08c300000710 | Statistics data |
| 0x002b | 0x002c | R (0x2) | 5a401536-ab2e-2548-c435-08c300000710 | |
| 0x002e | 0x002f | R (0x2) | 5a401537-ab2e-2548-c435-08c300000710 | |
| 0x0031 | 0x0032 | R (0x2) | 5a401538-ab2e-2548-c435-08c300000710 | Service Control Response |

| handle | value handle | properties | uuid | description |
|---|---|---|---|---|
| 0x0035 | 0x0036 | R (0x2) | 5a401624-ab2e-2548-c435-08c300000710 | |
| 0x0038 | 0x0039 | W (0x8) | 5a401625-ab2e-2548-c435-08c300000710 | |

| handle | value handle | properties | uuid | description |
|---|---|---|---|---|
| 0x003c | 0x003d | W- (0x4) | 00001532-1212-efde-1523-785feabcd123 | Nordic DFU_PACKET_CHARACTERISTI |
| 0x003e | 0x003f | W N (0x18) | 00001531-1212-efde-1523-785feabcd123 | Nordic DFU_CONTROL_POINT_CHARACTERISTIC |

I joined the MirageOS retreat in March 2019. It's a one-week event in Marrakech, Morocco. It takes place in a really nice house in the old city of Marrakech, the medina. The event itself doesn't have much structure beyond a morning meeting and occasional talks in the evening.

MirageOS is a unikernel written in OCaml. MirageOS can run on top of many backends, e.g. as a Unix process or on xen, kvm, bhyve. At this retreat I took care of the Internet uplink. We had a slow and leaky 4 MBit ADSL line from Maroc Telecom, which we used as backup, while using LTE as the main uplink. We first used imwi as provider. But imwi changes the IPs quite often and the implementation in OpenWrt uqmi does not follow the IP changes, which resulted in a stale LTE connection. Imwi is also filtering all UDP DNS queries, except those going to their own servers. We then switched to Orange as provider, because someone had a card available. Orange was fast enough, with a pretty stable 5 MBit up & down. We consumed roughly 20 GB a day. This brought us a nice daily ritual: a walk to a small and nice mobile shop in the medina. 1 GB costs 10 Dh (1 Euro). Our router, an APU2, runs OpenWrt, but we disabled DNS & DHCP and ran these services on a separate APU using MirageOS.

Even though I'm not that familiar with OCaml and functional languages, I tried to fix a bug in the DHCP server implementation (PR#97). It worked for me; however, after deploying it, it turned out that it only worked for me and I had broken it for everybody else ;). This motivated me to start looking into TTCN-3, an ETSI language for testing network protocols. Later, together with Hannes, we fixed the DHCP server for real. Adding some TTCN-3 tests and creating a simple base is still on my TODO. Another really nice OCaml service on the side was a learn-ocaml instance: an interactive teaching web application for beginner and advanced OCaml programmers, including an annotating OCaml compiler. Sadly there is no instance on the internet yet, as the project is not ready for release.

While there I also worked a lot on reproducible builds for OpenWrt. I fixed 2 packages. All OpenWrt base packages are 100 % reproducible. Thanks to Daniel Golle, OpenWrt images can be cryptographically signed. This signature must be removed before looking for differences, which is also done in the reproducible builds setup for OpenWrt. 100% of ar71xx images are reproducible and 98% of ramips. The remaining 2% are also signature problems, but these signatures are in the middle instead of at the end of the image. I also found the time to integrate my package index parser into reproducible builds. It's much easier to just parse two package lists than to look at all package files to determine whether they are reproducible or not. The package index files also contain metadata of the packages, which the parser inserts into the reproducible builds database.

Some people from the QubesOS project joined the retreat. For example, there is a MirageOS firewall which replaces QubesOS's own one. There is also a Pong game, which can run as a QubesOS VM. Thanks to the QubesOS people for their help with my problems with disposable VMs.

Furthermore, I brought a beaglebone black with me to investigate bugs reported for that platform. While looking at it, I found out that the last release of OpenWrt (18.06.2) doesn't work on this board (fs: squashfs), while master works. I also fixed build issues with u-boot in OpenWrt for the beaglebone black when using a modern toolchain.

Since we used LTE as uplink, we wanted to know how much of our data volume was consumed. OpenWrt might have statistics, but those are stored only in memory and not saved anywhere. I didn't look for any OpenWrt packages which fix this problem, because the provider (Orange) supports a USSD code to retrieve the remaining volume.

What is USSD? USSD stands for Unstructured Supplementary Service Data. It's used on mobile phones to retrieve your balance, your phone number, your IMEI, [..]. Most people have used such codes. Take your phone, open the phone application and call *#06#; it will return your phone's unique identifier (IMEI). While SMS is a store-and-forward scheme, like email, USSD is a real-time message protocol, similar to a TCP connection. The USSD codes are simple: do a request, get a response. Done. But Orange implemented a menu via USSD. So the USSD session will look like: Request, Response, Choose Your Menu, Response, Go Back, Choose a different Point. I've started writing USSD support for libqmi. Simple USSD codes can be requested and decoded, but not menus with user input.

And the biggest problem is: OpenWrt doesn't support USSD at all. Not even the simple ones.

Sometimes, when I'm not around or I forgot to plug the power supply into my laptop, my laptop runs into the critical power action. Because I'm using upowerd, my machine tries to do this:

Great! My machine shuts down, in the middle of doing something. It would take 2 minutes to get a power supply, but too late!!

But there might be a solution for this: suspend. My machine can survive more than 1 hour in suspend with this low battery.

It would help me NOT lose my current unsaved work.

After looking into the upowerd, it's just a 1 line code change to allow this. It is not a good default, but there are people who like to use this.

But .. the upowerd developers don't like it. They don't even want to give the user this option. Independent of that, I agree this shouldn't be the default. We have been discussing this issue for years without any solution. Upowerd wants to decide what users should do with their laptops and what not.

How to resolve it?

From time to time you need to test things with an old image. But how do you test things when the original build environment is lost and you want to test sysupgrade against this old release (actually 12.09)?

First you have to create a flash dump of the firmware partition.

    # grep firmware /proc/mtd
    mtd5: 003d0000 00010000 "firmware"
    # ssh root@192.168.1.1 dd if=/dev/mtd5 > /tmp/firmware_backup

Afterwards you can use binwalk to get the actual offsets of the different parts inside.

    # binwalk /tmp/firmware_backup

    DECIMAL       HEXADECIMAL     DESCRIPTION
    --------------------------------------------------------------------------------
    512           0x200           LZMA compressed data, properties: 0x6D, dictionary size: 8388608 bytes, uncompressed size: 2813832 bytes
    930352        0xE3230         Squashfs filesystem, little endian, version 4.0, compression:xz, size: 2194094 bytes, 728 inodes, blocksize: 262144 bytes, created: 2014-03-05 14:58:48
    3145728       0x300000        JFFS2 filesystem, big endian

So the firmware partition on ar71xx is still using the (old) layout of

    ----------
    |KERNEL  |
    ----------
    |squashfs|
    ----------
    |jffs2   |
    ----------

While a sysupgrade image contains for those platforms:

    --------------
    |KERNEL      |
    --------------
    |squashfs    |
    --------------
    |jffs2-dummy |
    --------------

So we will split off the jffs2 part and replace it with a jffs2-dummy.

# dd if=/tmp/firmware_backup bs=3145728 count=1 of=/tmp/sysupgrade.img

Next we add this jffs2-dummy by using the same tool LEDE uses for it:

# /home/lynxis/lede/staging_dir/host/bin/padjffs2 /tmp/sysupgrade.img 64

The 64 means the padding size in kb. It's important to choose the right one, but for most devices this is 64k at least for ar71xx.

    ssh root@192.168.1.1 dd if=/dev/mtd5 > /tmp/firmware_backup
    binwalk /tmp/firmware_backup
    dd if=/tmp/firmware_backup bs=3145728 count=1 of=/tmp/sysupgrade.img
    /home/lynxis/lede/staging_dir/host/bin/padjffs2 /tmp/sysupgrade.img 64

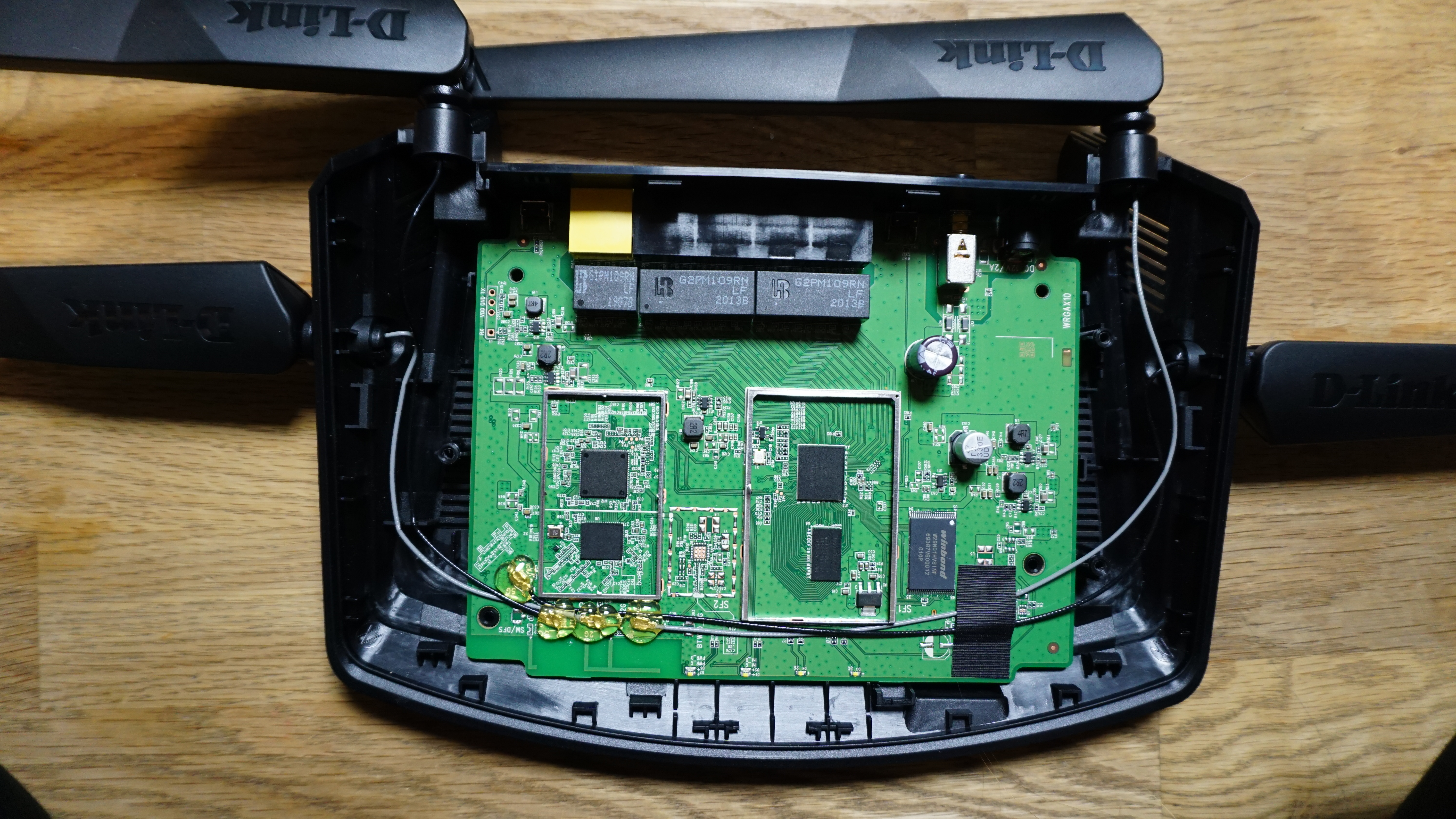

A friend gave me his x1 carbon gen1 some time ago. The x1 carbon is a little bit different from other ThinkPads because it's a combination of a ThinkPad and an Ultrabook.

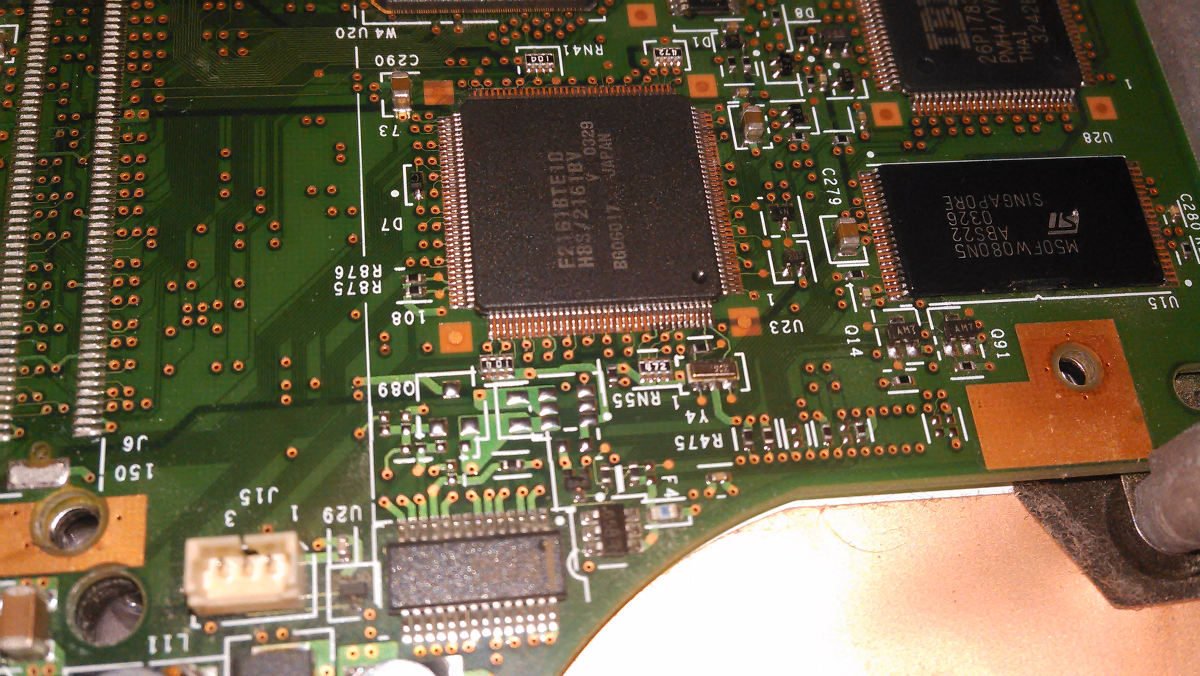

Looking under the hood, the x1 carbon gen1 looks very similar to the x230.

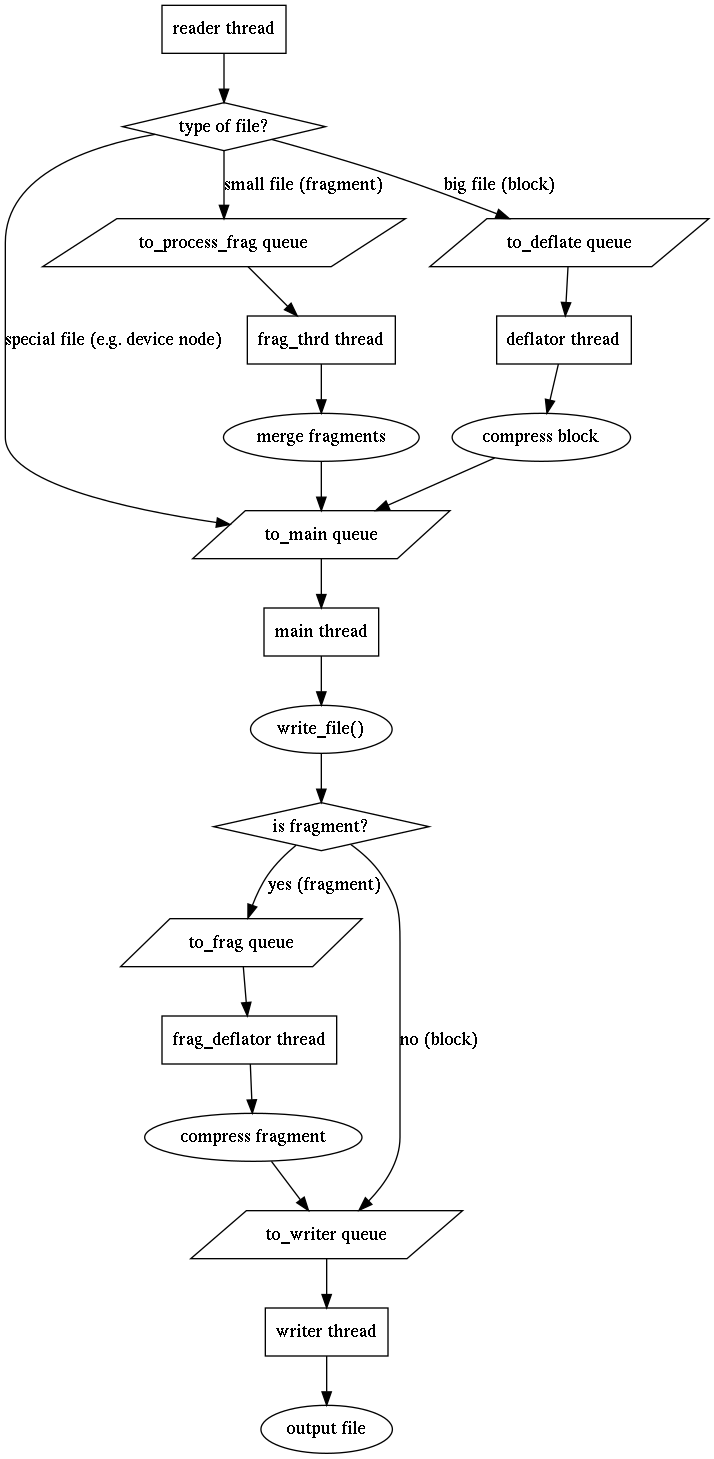

A picture says more than 1000 words. This is how mksquashfs 4.3 works.

This howto will guide you through creating your own kernel patch using the LEDE infrastructure. It's based on LEDE reboot-1279-gc769c1b.

LEDE already has a lot of patches. They are all applied on one tree. We will create a new patch for lantiq. To get started, let's see how LEDE organizes the patches. First of all, we take a look at /target/linux/*

All of these folders represent an architecture target, except generic. The generic target is used by all targets.

To continue, we need to know which kernel version your target architecture is running on. This is written down into target/linux/lantiq/Makefile.

We're running a 4.4.Y kernel. The Y is written down into /include/kernel-version.mk. We will use .15.

Ok. Now let's see. When LEDE is preparing the kernel build directory, it searches for a matching patch directory.

But which is the right patches directory? It uses the following make snippet from /include/kernel.mk

Meaning it will use /patches-4.4 if it exists, and otherwise it tries to use /patches.
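The fallback can be expressed in a few lines of Python. This is not the make snippet LEDE uses, only a sketch of the selection logic as described; the function name and the temporary directories are made up for the demonstration:

```python
import os
import tempfile
from typing import Optional


def pick_patch_dir(target_dir: str, kernel_patchver: str) -> Optional[str]:
    """Prefer patches-<version>, fall back to patches, else return None."""
    for name in (f"patches-{kernel_patchver}", "patches"):
        candidate = os.path.join(target_dir, name)
        if os.path.isdir(candidate):
            return candidate
    return None


# Demonstration with a throwaway directory standing in for target/linux/lantiq.
with tempfile.TemporaryDirectory() as target:
    os.mkdir(os.path.join(target, "patches"))
    print(os.path.basename(pick_patch_dir(target, "4.4")))  # patches
    os.mkdir(os.path.join(target, "patches-4.4"))
    print(os.path.basename(pick_patch_dir(target, "4.4")))  # patches-4.4
```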

Now we know how patches are applied to the linux kernel tree. We could go into the directory, create a new patches directory and use quilt...

Or we use the quilt target for that.

make target/linux/clean -> to clean up the old directory. Now make target/linux/prepare QUILT=1 will unpack the source, copy all present patches into ./patches and use quilt to apply.

With quilt you can move forwards and backwards between patches, as well as modify them. cd ./build_dir/target-mips_34kc+dsp_uClibc-0.9.33.2/linux-lantiq/linux-4.4.15/ to switch into the linux directory.

Your modification is under ./build_dir/../linux-4.4.15/patches/platform/. With make target/linux/refresh it will refresh all patches and copy them to the correct folder under target/linux/*/patches.

The TP-Link CPE510, a nice outdoor device, showed bad rx behaviour when used with LEDE. I want to give a short overview of how to debug such problems. It could also help you find problems with ath9k PCI cards.

Let's get down to the device. The CPE510 is based on an AR9344 SoC. The integrated wireless part is supported by the ath9k driver. To learn more about the AR9344 you should take a look into the publicly available datasheet. (google for ar9344 datasheet ;)

The AR9344 supports using GPIOs for special purposes; this is called a GPIO function. If the function is enabled, the GPIO is internally routed to the special purpose. Now the simple part: if you know which register to look into, just look into it.

After reading pages 52/53 of the datasheet, it's clear that it can route every signal to every GPIO. Remember the table, because it explains which value routes which signal to the GPIO. We suspect the LNA signals are missing, because the receiving part of the CPE510 is bad. So the values 46 and 47 are the important ones: 46 is LNA Chain 0, 47 is LNA Chain 1. LNA stands for low-noise amplifier.

Now that we know how the GPIOs work, let's find the register controlling the GPIO function. The GPIO section starts at 130, but the interesting part is the GPIO IO Function 0 register at address 0x1804002c. It gives you 8 bits per GPIO to describe its function; if they are 0x0, no function is selected and the GPIO is used as a normal output. So if you write 46 into bits 0-7, you set GPIO0 to carry the LNA Chain 0 signal. Every GPIO from GPIO0 to GPIO19 can be configured using those registers.

We know what registers are interesting (0x1804002c - 0x1804003c). We know which values are interesting (decimal 46 and decimal 47).

But how can we read out those values from a running system? The first answer I hear is JTAG, but JTAG isn't easy to achieve and more difficult to use on ar71xx, because the bootloader usually deactivates JTAG as one of its first commands.

But we can ask the kernel. /dev/mem is quite useful for that. It's a direct way to the memory, very dangerous, but also handy ;). The easiest way to interface with /dev/mem is the simple utility called devmem or devmem2. To compile a compatible devmem2 you should use the GPL sources of the firmware, but you can also download the binary from here [1].

Copy devmem2 to /tmp via scp and start reading the values. Because mips is a 32-bit architecture, we have to read the registers as 32-bit words.

Back to our LNA values, 46 and 47. In hex those are 0x2E and 0x2F. We have to look for those values aligned to 8 bits.

    # ./devmem2 0x1804002c
    /dev/mem opened.
    Memory mapped at address 0x2aaae000.
    Value at address 0x1804002C (0x2aaae02c): 0x0
    # ./devmem2 0x18040030
    /dev/mem opened.
    Memory mapped at address 0x2aaae000.
    Value at address 0x18040030 (0x2aaae030): 0xB0A0900
    # ./devmem2 0x18040034
    /dev/mem opened.
    Memory mapped at address 0x2aaae000.
    Value at address 0x18040034 (0x2aaae034): 0x2D180000
    # ./devmem2 0x18040038
    /dev/mem opened.
    Memory mapped at address 0x2aaae000.
    Value at address 0x18040038 (0x2aaae038): 0x2C
    # ./devmem2 0x1804003c
    /dev/mem opened.
    Memory mapped at address 0x2aaae000.
    Value at address 0x1804003C (0x2aaae03c): 0x2F2E0000
    #

Found it in 0x1804003C. LNA 0 is GPIO 18 and LNA1 is GPIO 19.
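Splitting the register values into bytes by hand gets tedious, so here is a small sketch that does it. It assumes, as the readings suggest, that each 32-bit function register holds four 8-bit fields for four consecutive GPIOs, starting with GPIO0 in bits 0-7 of 0x1804002c; the constant and function names are made up:

```python
from typing import Dict

GPIO_FUNCTION0_ADDR = 0x1804002C  # first GPIO function register
LNA_SIGNALS = {46: "LNA Chain 0", 47: "LNA Chain 1"}


def decode_function_register(address: int, value: int) -> Dict[int, int]:
    """Map each GPIO covered by this register to its 8-bit function value."""
    register_index = (address - GPIO_FUNCTION0_ADDR) // 4
    first_gpio = register_index * 4
    return {first_gpio + n: (value >> (8 * n)) & 0xFF for n in range(4)}


# Values read with devmem2 above.
readings = {
    0x1804002C: 0x0,
    0x18040030: 0x0B0A0900,
    0x18040034: 0x2D180000,
    0x18040038: 0x2C,
    0x1804003C: 0x2F2E0000,
}

for address, value in readings.items():
    for gpio, function in decode_function_register(address, value).items():
        if function in LNA_SIGNALS:
            print(f"GPIO{gpio}: {LNA_SIGNALS[function]}")
# GPIO18: LNA Chain 0
# GPIO19: LNA Chain 1
```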

For a long time I have been inspired by the features of LAVA (Linaro Automated Validation Architecture). Lava was developed by Linaro to run automatic tests on real hardware. It's written in Python and based on a lot of small daemons and one Django application. It schedules submitted tests on hardware depending on rights and availability. Setting up your own instance isn't so hard; there is a video howto. But Lava is changing its basic device model to pipeline devices to make it more flexible, because the old device model was quite limited. Our instance is available under https://lava.coreboot.org. Atm. there is only one device (x60) and we're looking for help to add more devices.

coreboot is under heavy development, with around 200 commits a month. Sometimes it breaks, most of the time because somebody refactored code and made it simpler. There are many devices supported by coreboot, but commits aren't tested on every piece of hardware, which means it breaks on some hardware. And here the bisect loop begins.

Lava is the perfect place to do bisecting. You can submit a test job via command line, track the job and wait until it's done. Lava itself takes care that a job doesn't take too long. To break down the task into smaller pieces:

- checkout a revision

- compile coreboot

- copy artefact somewhere where Lava can access it (http-server)

- submit a lava testjob

- lava deploys your image and does some tests

git-bisect does the binary search for the broken revision and checks out the next commit which needs to be tested. But somebody has to tell git-bisect whether this is a good or a bad revision. Or you use git bisect run. git bisect run runs a small script and uses the return code to know whether this is a good or a bad revision. There is also a third result, skip, to skip the revision if the compilation fails. git-bisect would do the full bisect job, but to use lava, it needs a Lava Test Job. Under https://github.com/lynxis/coreboot-lava-bisect is my x60 bisect script together with a Lava Test Job for the x60. It only checks whether coreboot is booting. But you might want to test something else. Is the cdrom showing up? Is the wifi card properly detected? Check out the lava documentation for more information about how to write a Lava Test Job or a Lava Test.

To communicate with Lava on the shell you need to have lava-tool running on your workstation. See https://validation.linaro.org/static/docs/overview.html

With lava-tool submit-job $URL job.yml you can submit a job and get the job ID, and with lava-tool job-status $URL $JOBID you can check the status of your job. Depending on the job status, the script must set its exit code. My bisect script for coreboot is at https://github.com/lynxis/coreboot-lava-bisect

    cd coreboot
    # CPU make -j$CPU
    export CPU=4
    # your login user name for the lava.coreboot.org
    # you can also use LAVAURL="https://$LAVAUSER@lava.coreboot.fe80.eu/RPC2"
    export LAVAUSER=lynxis
    # used by lava to download the coreboot.rom
    export COREBOOTURL=https://fe80.eu/bisect/coreboot.rom
    # used as a target by *scp*
    export COREBOOT_SCP_URL=lynxis@fe80.eu:/var/www/bisect/coreboot.rom
    git bisect start
    git bisect bad <REV>
    git bisect good <REV>
    git bisect run /path/to/this/dir/bisect.sh
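The exit-code contract that git bisect run relies on can be sketched in a few lines of Python. This is only an illustration and not the actual bisect.sh: the function name and the status strings ("Complete", "Incomplete") are assumptions, so check what lava-tool job-status really prints on your instance. The exit codes themselves (0 for good, 125 for skip, any other code from 1 to 127 for bad) are the ones git bisect run documents.

```python
# Exit codes understood by `git bisect run`.
GOOD = 0     # revision is good
BAD = 1      # any code from 1 to 127, except 125, marks the revision bad
SKIP = 125   # revision cannot be tested, e.g. the build failed


def bisect_exit_code(build_ok: bool, job_status: str) -> int:
    """Map a build result and a job status string to a bisect exit code.

    The status strings are assumptions for illustration; they are not
    taken from real lava-tool output.
    """
    if not build_ok:
        return SKIP
    if job_status == "Complete":
        return GOOD
    if job_status == "Incomplete":
        return BAD
    # Unknown or unfinished state: do not guess, skip the revision.
    return SKIP
```

Skipping on anything unexpected is a deliberate choice here: a wrong "bad" verdict sends the binary search down the wrong half, while a skip only costs one extra step.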

All roads lead to Rome, but PulseAudio is not far behind! In fact, how the PulseAudio client library determines how to try to connect to the PulseAudio server has no less than 13 different steps. Here they are, in priority order:

1) As an application developer, you can specify a server string in your call to pa_context_connect. If you do that, that’s the server string used, nothing else.

2) If the PULSE_SERVER environment variable is set, that’s the server string used, and nothing else.

3) Next, it goes to X to check if there is an x11 property named PULSE_SERVER. If there is, that’s the server string, nothing else. (There is also a PulseAudio module called module-x11-publish that sets this property. It is loaded by the start-pulseaudio-x11 script.)

4) It also checks client.conf, if such a file is found, for the default-server key. If that’s present, that’s the server string.

So, if none of the four methods above gives any result, several items will be merged and tried in order.

First up is trying to connect to a user-level PulseAudio, which means finding the right path where the UNIX socket exists. That in turn has several steps, in priority order:

5) If the PULSE_RUNTIME_PATH environment variable is set, that’s the path.

6) Otherwise, if the XDG_RUNTIME_DIR environment variable is set, the path is the “pulse” subdirectory below the directory specified in XDG_RUNTIME_DIR.

7) If not, and the “.pulse” directory exists in the current user’s home directory, that’s the path. (This is for historical reasons – a few years ago PulseAudio switched from “.pulse” to using XDG compliant directories, but ignoring “.pulse” would throw away some settings on upgrade.)

8) Failing that, if XDG_CONFIG_HOME environment variable is set, the path is the “pulse” subdirectory to the directory specified in XDG_CONFIG_HOME.

9) Still no path? Then fall back to using the “.config/pulse” subdirectory below the current user’s home directory.

Okay, so maybe we can connect to the UNIX socket inside that user-level PulseAudio path. But if it does not work, there are still a few more things to try:

10) Using a path of a system-level PulseAudio server. This directory is /var/run/pulse on Ubuntu (and probably most other distributions), or /usr/local/var/run/pulse in case you compiled PulseAudio from source yourself.

11) By checking client.conf for the key “auto-connect-localhost”. If so, also try connecting to tcp4:127.0.0.1…

12) …and tcp6:[::1], too. Of course we cannot leave IPv6-only systems behind.

13) As the last straw of hope, the library checks client.conf for the key “auto-connect-display”. If it’s set, it checks the DISPLAY environment variable, and if it finds a hostname (i e, something before the “:”), then that host will be tried too.

To summarise: first the client library checks for a server string in steps 1-4. If there is none, it builds a server string out of one item from steps 5-9, followed by up to four more items from steps 10-13.
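The directory lookup in steps 5-9 can be written down as a small function. This is a sketch of the order described above, not code from the PulseAudio client library; the function name and the injectable dir_exists callback are made up for the example.

```python
import posixpath
from typing import Callable, Mapping


def pulse_runtime_path(
    env: Mapping[str, str],
    home: str,
    dir_exists: Callable[[str], bool],
) -> str:
    """Return the user-level directory chosen by steps 5-9."""
    # Step 5: explicit override.
    if env.get("PULSE_RUNTIME_PATH"):
        return env["PULSE_RUNTIME_PATH"]
    # Step 6: "pulse" below XDG_RUNTIME_DIR.
    if env.get("XDG_RUNTIME_DIR"):
        return posixpath.join(env["XDG_RUNTIME_DIR"], "pulse")
    # Step 7: legacy ~/.pulse, only if it already exists.
    legacy = posixpath.join(home, ".pulse")
    if dir_exists(legacy):
        return legacy
    # Step 8: "pulse" below XDG_CONFIG_HOME.
    if env.get("XDG_CONFIG_HOME"):
        return posixpath.join(env["XDG_CONFIG_HOME"], "pulse")
    # Step 9: final fallback.
    return posixpath.join(home, ".config", "pulse")
```

Calling it with os.environ, the current user's home directory and os.path.isdir would mimic a real lookup; passing a fake dir_exists makes the priority order easy to try out.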

And that’s all. If you ever want to customize how you connect to a PulseAudio server, you have a smorgasbord of options to choose from!

This one’s going to be a bit of a long post. You might want to grab a cup of coffee before you jump in!

Over the last few years, I’ve spent some time getting PulseAudio up and running on a few Android-based phones. There was the initial Galaxy Nexus port, a proof-of-concept port of Firefox OS (git) to use PulseAudio instead of AudioFlinger on a Nexus 4, and most recently, a port of Firefox OS to use PulseAudio on the first gen Moto G and last year’s Sony Xperia Z3 Compact (git).

The process so far has been largely manual and painstaking, and I’ve been trying to make that easier. But before I talk about the how of that, let’s see how all this works in the first place.

If you have managed to get by without having to dig into this dark pit, the porting process can be something of an exercise in masochism. More so if you’re in my shoes and don’t have access to any of the documentation for the audio hardware. Hardware vendors and OEMs usually don’t share these specifications unless under NDA, which is hard to set up as someone just hacking on this stuff as an experiment or for fun in their spare time.

Broadly, the task involves looking at how the device is set up on Android, and then replicating that process using the standard ALSA library, which is what PulseAudio uses (this works because both the Android and generic Linux userspace talk to the same ALSA-based kernel audio drivers).

First, you look at the Android audio HAL code for the device you’re porting, and the corresponding mixer paths XML configuration. Between the two of these, you get a description of how you can configure the hardware to play back audio in various use cases (music, tones, voice calls), and how to route the audio (headphones, headset, speakers, Bluetooth).

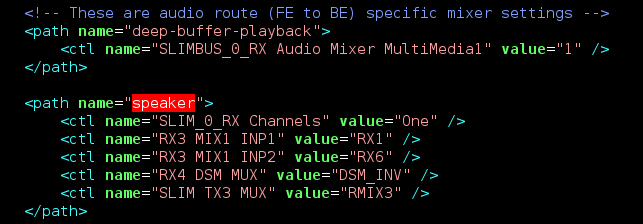

Snippet from mixer paths XML

In this example, there is one path that describes how to set up the hardware for “deep buffer playback” (used for music, where you can buffer a bunch of data and let the CPU go to sleep). The next path, “speaker”, tells us how to set up the routing to play audio out of the speaker.

These strings are not well-defined, so different hardware uses different path names and combinations to set up the hardware. The XML configuration also does not tell us a number of things, such as what format the hardware supports or what ALSA device to use. All of this information is embedded in the audio HAL code.

Next, you need to translate this configuration into something PulseAudio will understand [1]. The preferred method for this is ALSA’s UCM, which describes how to set up the hardware for each use case it supports, and how to configure the routing in each of those use cases.

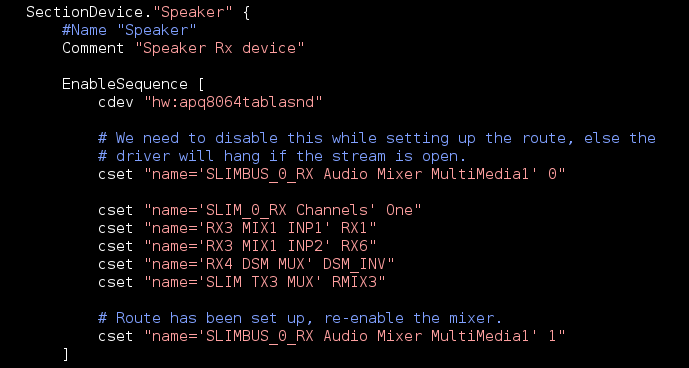

Snippet from UCM

This is a snippet from the “hi-fi” use case, which is the UCM use case roughly corresponding to “deep buffer playback” in the previous section. Within that, we’re looking at the “speaker device” and you can see the same mixer controls as in the previous XML file are toggled. This file does have some additional information — for example, this snippet specifies what ALSA device should be used to toggle mixer controls (“hw:apq8064tablasnd”).

Typically, I start with the “hi-fi” use case — what you would normally use for music playback (and could likely use for tones and such as well). Getting the “phone” use case working is usually much more painful. In addition to setting up the audio hardware similar to the “hi-fi” use case, it involves talking to the modem, for which there isn’t a standard method across Android devices. To complicate things, the modem firmware can be extremely sensitive to the order/timing of setup, often with no means of debugging (a.k.a. fun times!).

When there is a new Android version, I need to look at all the changes in the HAL and the XML file, redo the translation to UCM, and then test everything again.

This is clearly repetitive work, and I know I’m not the only one having to do it. Hardware vendors often face the same challenge when supporting the same devices on multiple platforms — Android’s HAL usually uses the XML config I showed above, ChromeOS’s CrAS and PulseAudio use ALSA UCM, Intel uses the parameter framework with its own XML format.

With this background, when I started looking at the Z3 Compact port last year, I decided to write a tool to make this and future ports easier. That tool is creatively named xml2ucm [2].

As we saw, the ALSA UCM configuration contains more information than the XML file. It contains a description of the playback and mixer devices to use, as well as some information about configuration (channel count, primarily). This information is usually hardcoded in the audio HAL on Android.

To deal with this, I introduced a small configuration file that provides the additional information required to perform the translation. The idea is that you write this configuration once, and can more or less perform the translation automatically. If the HAL or the XML file changes, it should be easy to implement that as a change in the configuration and just regenerate the UCM files.

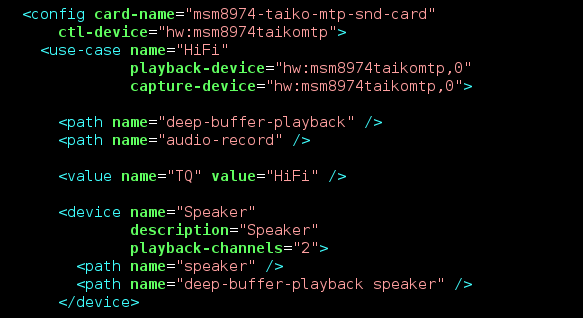

Example xml2ucm configuration

This example shows how the Android XML like in the snippet above can be converted to the corresponding UCM configuration. Once I had the code done, porting all the hi-fi bits on the Xperia Z3 Compact took about 30 minutes. The results of this are available as a more complete example: the mixer paths XML, the config XML, and the generated UCM.
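To make the core of the translation concrete, here is a minimal Python sketch that turns one path from a mixer paths XML into a UCM-style enable sequence. It is not xml2ucm (which is written in Haskell and also handles the extra configuration file); the function name and the mixer control names in the sample XML are invented for illustration, and nested path references are ignored.

```python
import xml.etree.ElementTree as ET

# Hypothetical mixer paths XML; the control names are made up.
MIXER_PATHS = """
<mixer>
  <path name="speaker">
    <ctl name="Example Speaker Switch" value="1" />
    <ctl name="Example Output Mux" value="DAC1" />
  </path>
</mixer>
"""


def path_to_enable_sequence(xml_text: str, path_name: str, cdev: str) -> str:
    """Render one <path> element as a UCM-style EnableSequence block."""
    root = ET.fromstring(xml_text)
    for path in root.iter("path"):
        if path.get("name") != path_name:
            continue
        # The control device is information the XML does not carry,
        # so the caller has to supply it.
        lines = ["EnableSequence [", '    cdev "%s"' % cdev]
        for ctl in path.findall("ctl"):
            name, value = ctl.get("name"), ctl.get("value")
            lines.append("    cset \"name='%s' %s\"" % (name, value))
        lines.append("]")
        return "\n".join(lines)
    raise KeyError(path_name)


print(path_to_enable_sequence(MIXER_PATHS, "speaker", "hw:apq8064tablasnd"))
```

The cdev argument stands in for the kind of detail that lives in the audio HAL rather than in the XML, which is why a separate configuration file is needed for a real translation.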

One big missing piece here is voice calls. I spent some time trying to get voice calls working on the two phones I had available to me (the Moto G and the Z3 Compact), but this is quite challenging without access to hardware documentation and I ran out of spare time to devote to the problem. It would be nice to have a complete working example for a device, though.

There are other configuration mechanisms out there — notably Intel’s parameter framework. It would be interesting to add support for that as well. Ideally, the code could be extended to build a complete model of the audio routing/configuration, and generate any of the configuration that is supported.

I’d like this tool to be generally useful, so feel free to post comments and suggestions on Github or just get in touch.

p.s. Thanks go out to Abhinav for all the Haskell help!

[1] Another approach, which the Ubuntu Phone and Jolla SailfishOS folks take, is to just use the Android HAL directly from PulseAudio to set up and use the hardware. This makes sense to quickly enable any arbitrary device (because the HAL provides a hardware-independent interface to do so). In the longer term, I prefer to enable using UCM and alsa-lib directly since it gives us more control, and allows us to use such features as PulseAudio’s dynamic latency adjustment if the hardware allows it.

[2] You might have noticed that the tool is written in Haskell. While this is decidedly not a popular choice of language, it did make for a relatively easy implementation and provides a number of advantages. The unfortunate cost is that most people will find it hard to jump in and start contributing. If you have a feature request or bug fix but are having trouble translating it into code, please do file a bug, and I would be happy to help!

Happy 2016 everyone!

While I did mention a while back (almost two years ago, wow) that I was taking a break, I realised recently that I hadn’t posted an update from when I started again.

For the last year and a half, I’ve been providing freelance consulting around PulseAudio, GStreamer, and various other directly and tangentially related projects. There’s a brief list of the kind of work I’ve been involved in.

If you’re looking for help with PulseAudio, GStreamer, multimedia middleware or anything else you might’ve come across on this blog, do get in touch!

2.1 surround sound is (by a very unscientific measure) the third most popular surround speaker setup, after 5.1 and 7.1. Yet ALSA and PulseAudio have long supported more unusual setups such as 4.0 and 4.1, but not 2.1. It took until 2015 to get all pieces in the stack ready for 2.1 as well.

The problem

So what made adding 2.1 surround more difficult than other setups? Well, first and foremost, because ALSA used to have a fixed mapping of channels. The first six channels were decided to be:

1. Front Left

2. Front Right

3. Rear Left

4. Rear Right

5. Front Center

6. LFE / Subwoofer

Thus, a four channel stream would default to the first four, which would then be a 4.0 stream, and a three channel stream would default to the first three. The only way to send a 2.1 channel stream would then be to send a six channel stream with three channels being silence.
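A few lines of Python show why the fixed order forces 2.1 into a six channel stream. The short position names and helper functions are my own shorthand, not ALSA identifiers.

```python
# The fixed channel order listed above.
FIXED_ORDER = ["FL", "FR", "RL", "RR", "FC", "LFE"]


def channels_needed(wanted):
    """Smallest stream width that reaches every wanted position."""
    return max(FIXED_ORDER.index(pos) for pos in wanted) + 1


def stream_layout(wanted):
    """Per-channel content of the stream: a position name or 'silence'."""
    width = channels_needed(wanted)
    return [pos if pos in wanted else "silence" for pos in FIXED_ORDER[:width]]


print(stream_layout({"FL", "FR", "LFE"}))
# ['FL', 'FR', 'silence', 'silence', 'silence', 'LFE']
```

Because LFE sits in the sixth slot, a 2.1 stream cannot be narrower than six channels under this scheme, which is exactly what a card limited to four channels cannot accept.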

This was not good enough, because some cards, including laptops with internal subwoofers, would only support streaming four channels maximum.

(To add further confusion, it seemed some cards wanted the subwoofer signal on the third channel of four, and others wanted the same signal on the fourth channel of four instead.)

ALSA channel map API

The first part of the solution was a new alsa-lib API for channel mapping, allowing drivers to advertise what channel maps they support, and alsa-lib to expose this information to programs (see snd_pcm_query_chmaps, snd_pcm_get_chmap and snd_pcm_set_chmap).

The second step was for the alsa-lib route plugin to make use of this information. With that, alsa-lib could itself determine whether the hardware was 5.1 or 2.1, and change the number of channels automatically.

PulseAudio bass / treble filter

With the alsa-lib additions, just adding another channel map was easy.

However, there was another problem to deal with. When listening to stereo material, we would like the low frequencies, and only those, to be played back from the subwoofer. These frequencies should also be removed from the other channels. In some cases, the hardware would have a built-in filter to do this for us, so then it was just a matter of setting enable-lfe-remixing in daemon.conf. In other cases, this needed to be done in software.

Therefore, we’ve integrated a crossover filter into PulseAudio. You can configure it by setting lfe-crossover-freq in daemon.conf.
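The idea of a crossover, sending the low band to the subwoofer and keeping only the rest on the main speakers, can be illustrated with a deliberately simple filter. The sketch below uses a one-pole low-pass and its complement; it is not the filter PulseAudio ships, and the function name and the 120 Hz value in the usage lines are only example choices.

```python
import math


def split_lfe(samples, crossover_hz, sample_rate):
    """Split a mono signal into (low, high) bands with a one-pole filter.

    The high band is computed as input minus low band, so the two bands
    always sum back to the original signal.
    """
    # Smoothing coefficient of a one-pole low-pass filter.
    alpha = 1.0 - math.exp(-2.0 * math.pi * crossover_hz / sample_rate)
    low, high, state = [], [], 0.0
    for x in samples:
        state += alpha * (x - state)
        low.append(state)        # goes to the subwoofer
        high.append(x - state)   # stays on the main channel
    return low, high


# A constant (0 Hz) input ends up almost entirely in the low band.
low, high = split_lfe([1.0] * 48000, crossover_hz=120, sample_rate=48000)
print(round(low[-1], 3), round(high[-1], 3))
```

A real crossover uses steeper filters so that the two bands overlap less around the crossover frequency, but the split-and-route structure is the same.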

The hardware

If you have a laptop with an internal subwoofer, chances are that it – with all these changes to the stack – still does not work. Because the HDA standard (which is what your laptop very likely uses for analog audio), does not have much of a channel mapping standard either! So vendors might decide to do things differently, which means that every single hardware model might need a patch in the kernel.

If you don’t have an internal subwoofer, but a separate external one, you might be able to use hdajackretask to reconfigure your headphone jack to an “Internal Speaker (LFE)” instead. But the downside of that, is that you then can’t use the jack as a headphone jack…

Do I have it?

In Ubuntu, it’s been working since the 15.04 release (vivid). If you’re not running Ubuntu, you need alsa-lib 1.0.28, PulseAudio 7, and a kernel from, say, mid 2014 or later.

Acknowledgements

Takashi Iwai wrote the channel mapping API, and also provided help and fixes for the alsa-lib route plugin work.

The crossover filter code was imported from CRAS (but after refactoring and cleanup, there was not much left of that code).

Hui Wang helped me write and test the PulseAudio implementation.

PulseAudio upstream developers, especially Alexander Patrakov, did a thorough review of the PulseAudio patch set.

We just rolled out a minor bugfix release. Quick changelog:

More details on the mailing list.

Thanks to everyone who contributed with bug reports and testing. What isn’t generally visible is that a lot of this happens behind the scenes downstream on distribution bug trackers, IRC, and so forth.

I know it’s been ages, but I am now working on updating the webrtc-audio-processing library. You might remember this as the code that we split off from the webrtc.org code to use in the PulseAudio echo cancellation module.

This is basically just the AudioProcessing module, bundled as a standalone library so that we can use the fantastic AEC, AGC, and noise suppression implementation from that code base. For packaging simplicity, I made a copy of the necessary code, and wrote an autotools-based build system around that.

Now since I last copied the code, the library API has changed a bit — nothing drastic, just a few minor cleanups and removed API. This wouldn’t normally be a big deal since this code isn’t actually published as external API — it’s mostly embedded in the Chromium and Firefox trees, probably other projects too.

Since we are exposing a copy of this code as a standalone library, this means that there are two options — we could (a) just break the API, and all dependent code needs to be updated to be able to use the new version, or (b) write a small wrapper to try to maintain backwards compatibility.

I’m inclined to just break API and release a new version of the library which is not backwards compatible. My rationale for this is that I’d like to keep the code as close to what is upstream as possible, and over time it could become painful to maintain a bunch of backwards-compatibility code.

A nicer solution would be to work with upstream to make it possible to build the AudioProcessing module as a standalone library. While the folks upstream seemed amenable to the idea when this came up a few years ago, nobody has stepped up to actually do the work for this. In the mean time, a number of interesting features have been added to the module, and it would be good to pull this in to use in PulseAudio and any other projects using this code (more about this in a follow-up post).

So if you’re using webrtc-audio-processing, be warned that the next release will probably break API, and you’ll need to update your code. I’ll try to publish a quick update guide when releasing the code, but if you want to look at the current API, take a look at the current audio_processing.h.

p.s.: If you do use webrtc-audio-processing as a dependency, I’d love to hear about it. As far as I know, PulseAudio is the only user of this library at the moment.

This one’s a bit late, for reasons that’ll be clear enough later in this post. I had the happy opportunity to go to GUADEC in Gothenburg this year (after missing the last two, unfortunately). It was a great, well-organised event, and I felt super-charged again, meeting all the people making GNOME better every day.

GUADEC picnic @ Gothenburg

I presented a status update of what we’ve been up to in the PulseAudio world in the past few years. Amazingly, all the videos are up already, so you can catch up with anything that you might have missed here.

We also had a meeting of PulseAudio developers, at which a number of interesting topics of discussion came up (I’ll try to summarise my notes in a separate post).

A bunch of other interesting discussions happened in the hallways, and I’ll write about that if my investigations take me some place interesting.

Now the downside — I ended up missing the BoF part of GUADEC, and all of the GStreamer hackfest in Montpellier after. As it happens, I contracted dengue and I’m still recovering from this. Fortunately it was the lesser (non-haemorrhagic) version without any complications, so now it’s just a matter of resting till I’ve recuperated completely.

Nevertheless, the first part of the trip was great, and I’d like to thank the GNOME Foundation for sponsoring my travel and stay, without which I would have missed out on all the GUADEC fun this year.

Sponsored by GNOME!

I was in Depok, Indonesia last week to speak at GNOME Asia 2015. It was a great experience — the organisers did a fantastic job and as a bonus, the venue was incredibly pretty!

View from our room

My talk was about the GNOME audio stack, and my original intention was to talk a bit about the APIs, how to use them, and how to choose which to use. After the first day, though, I felt like a more high-level view of the pieces would be more useful to the audience, so I adjusted the focus a bit. My slides are up here.

Nirbheek and I then spent a couple of days going down to Yogyakarta to cycle around, visit some temples, and sip some fine hipster coffee.

All in all, it was a week well spent. I’d like to thank the GNOME Foundation for helping me get to the conference!

Sponsored by GNOME!

Some friends gave me their old hardware to help me with my next project: creating an open source embedded controller firmware for Lenovo Thinkpads. My target platform is all Lenovo Thinkpads that use a Renesas/Hitachi H8S as EC. These chips are built into Thinkpads from very old ones to newer models like the X230.

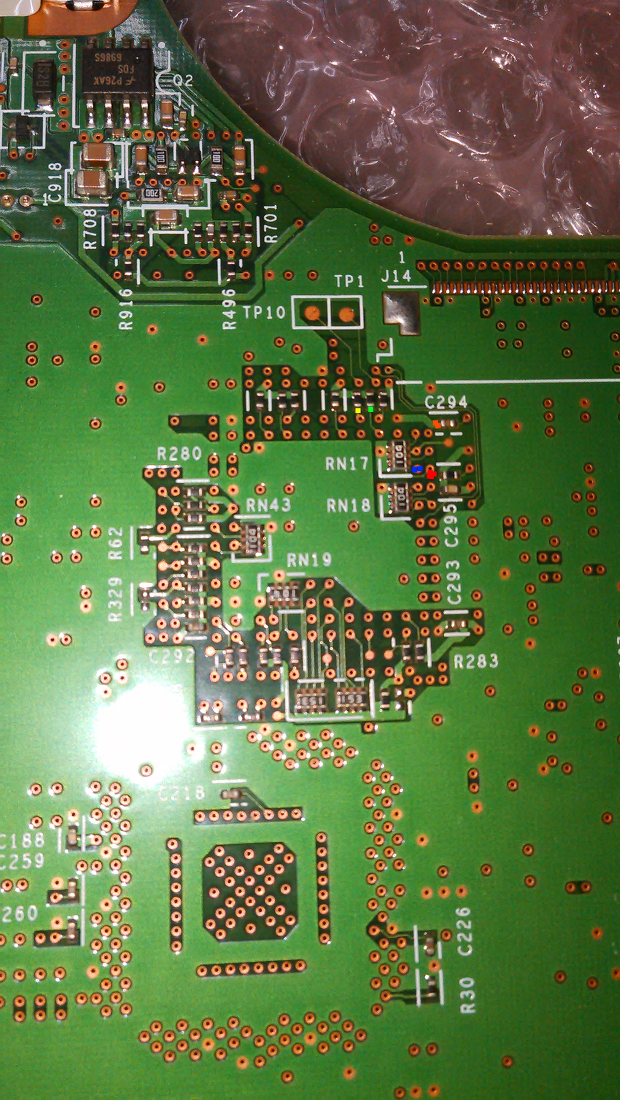

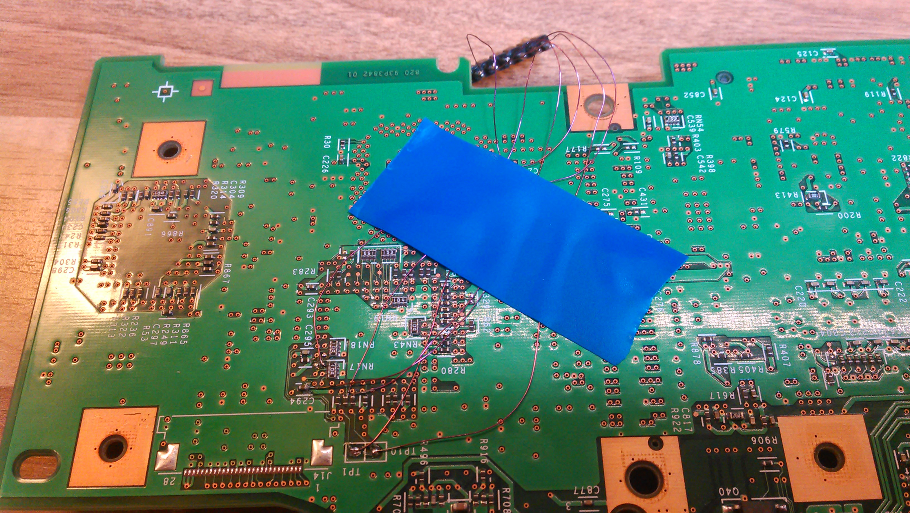

So what could be better than using a very old laptop like a T40 to solder and do hardware mods? The first step is flashing the chip independently of the operating system running on the hardware. Why? Because the EC controls certain power regulators and takes care of turning the mainboard on. With a broken EC you would not be able to turn your laptop on. The H8S supports different boot modes: normal mode, advanced mode, and boot mode. Boot mode is a special mode for developing and flashing: the chip receives its firmware over a normal UART and executes it. The H8S selects its boot mode via 2 pins, MD0 and MD1, also named mode0 and mode1. After looking at the schematics (which are available on the internet), we need to solder the UART pins RX and TX, plus MD1 and GND. MD0 isn't required, because it's already set to 0. I also soldered the test pins TP1 and TP10 (I2C). /RES was soldered too, because I miscounted the pins; /RES lies right beside MD1. But it can be useful later. I colored the pins on the bottom picture.

| Color | Pin |
| --- | --- |
| Red | MD1 |
| Orange | GND |
| Blue | /RES |
| Green | RX |
| Yellow | TX |

The soldered image is missing MD1.

I got the task to set up LACP with a not-so-new 3com switch on one side and Debian Jessie + Open vSwitch on the other. Usually not a big problem. Just keep in mind that 3com was bought by HP, and HP is still using 3com software in its newer products. It's even possible to update a 3com switch with HP firmware if you're lucky enough to know which HP product matches your 3com switch. Back to the task: set up LACP. I did everything mentioned in the manual:

- Check that all ports are the same media type

- All ports must be configured in the same way

- All ports must have the same LACP priority

Everything seems to be OK. The documentation also says that all ports will lose their individual port configuration when added. On the switch side I can see that the switch shows my Linux box as port partner, but the link group still isn't going into the 'Active' state; it keeps showing 'The port is not configured properly.'. An update is not an option from remote, because too many services depend on this switch. Let's take a closer look at the VLAN configuration. The LACP group isn't configured for any VLAN yet. But the ports still have an old configuration with a tagged VLAN and are in hybrid mode? Why? They have a PVID configured, but no untagged VLAN assigned. Looks strange.

Go to VLAN -> Modify Port: select both LACP ports as well as the LACP group and set them to Trunk mode without any VLAN. Now the LACP group changed to active. Maybe this has changed in newer HP firmware versions.

This is a technical post about PulseAudio internals and the protocol improvements in the upcoming PulseAudio 6.0 release.

PulseAudio is said to have a “zero-copy” architecture. So let’s look at what copies and buffers are involved in a typical playback scenario.

When the PulseAudio server and client run as the same user, PulseAudio enables shared memory (SHM) for audio data. (In other cases, SHM is disabled for security reasons.) Applications can use pa_stream_begin_write to get a pointer directly into the SHM buffer. When using pa_stream_write or going through the ALSA plugin, there will be one memory copy into the SHM.

On the server side, the server might need to convert the stream into a format that fits the hardware (and potential other streams that might be running simultaneously). This step is skipped if deemed unnecessary.

First, the samples are converted to either signed 16 bit or float 32 bit (mainly depending on resampler requirements).

In case resampling is necessary, we make use of external resampler libraries for this, the default being speex.

Second, if remapping is necessary, e g if the input is mono and the output is stereo, that is performed as well. Finally, the samples are converted to a format that the hardware supports.

So, in the worst case, there might be up to four different buffers involved here (first: after converting to “work format”, second: after resampling, third: after remapping, fourth: after converting to hardware supported format), and in the best case, this step is entirely skipped.
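As a rough model of the conversion step, the following Python sketch lists which intermediate buffers a stream would need for a given pair of sample specs. It is a simplification for illustration only: the SampleSpec class, the format names and the way the work format is passed in are assumptions, and the real server decides these things with more nuance.

```python
from dataclasses import dataclass

WORK_FORMATS = ("s16", "float32")


@dataclass(frozen=True)
class SampleSpec:
    fmt: str
    rate: int
    channels: int


def conversion_stages(src: SampleSpec, hw: SampleSpec, work_fmt: str = "float32"):
    """List the intermediate buffers a stream passes through (simplified)."""
    if work_fmt not in WORK_FORMATS:
        raise ValueError("work format must be one of %r" % (WORK_FORMATS,))
    if src == hw:
        return []  # best case: the whole step is skipped
    stages = []
    fmt = src.fmt
    if fmt != work_fmt:
        stages.append("convert to work format")
        fmt = work_fmt
    if src.rate != hw.rate:
        stages.append("resample")
    if src.channels != hw.channels:
        stages.append("remap")
    if fmt != hw.fmt:
        stages.append("convert to hardware format")
    return stages


# Worst case: format, rate and channel count all differ.
print(conversion_stages(SampleSpec("s24", 44100, 1), SampleSpec("s16", 48000, 2)))
```

With identical specs on both sides the list is empty, which corresponds to the zero-buffer best case described above.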

PulseAudio’s built in mixer multiplies each channel of each stream with a volume factor and writes the result to the hardware. In case the hardware supports mmap (memory mapping), we write the mix result directly into the DMA buffers.

The best we can do is one copy in total, from the SHM buffer directly into the DMA hardware buffer. I hope this clears up any confusion about what PulseAudio’s advertised “zero copy” capabilities mean in practice.

However, memory copies are not the only thing you want to avoid to get good performance, which brings us to the next point:

PulseAudio does pretty well CPU wise for high latency loads (e g music playback), but a bit worse for low latency loads (e g VOIP, gaming). Or to put it another way, PulseAudio has a low per sample cost, but there is still some optimisation that can be done per packet.

For every playback packet, there are three messages sent: from server to client saying “I need more data”, from client to server saying “here’s some data, I put it in SHM, at this address”, and then a third from server to client saying “thanks, I have no more use for this SHM data, please reclaim the memory”. The third message is not sent until the audio has actually been played back.

For every message, it means syscalls to write, read, and poll a unix socket. This overhead turned out to be significant enough to try to improve.
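A back-of-the-envelope calculation shows why this matters mostly for low latency. The sketch assumes exactly one write, one read and one poll per message, which is a simplification, and the packet sizes in the usage lines are illustrative rather than measured values.

```python
MESSAGES_PER_PACKET = 3   # request, data, release (as described above)
SYSCALLS_PER_MESSAGE = 3  # assumption: one write, one read, one poll


def socket_syscalls_per_second(packet_ms: float) -> float:
    """Rough syscall rate for one playback stream with packets of packet_ms."""
    packets_per_second = 1000.0 / packet_ms
    return packets_per_second * MESSAGES_PER_PACKET * SYSCALLS_PER_MESSAGE


print(socket_syscalls_per_second(10))    # low latency: 900.0 per second
print(socket_syscalls_per_second(1000))  # music playback: 9.0 per second
```

The per-packet cost is the same in both cases; what changes is how often it is paid, so a hundredfold smaller packet means a hundredfold more syscalls.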

So instead of putting just the audio data into SHM, as of 6.0 we also put the messages into two SHM ringbuffers, one in each direction. For signalling we use eventfds. (There is also an optimisation layer on top of the eventfd that tries to avoid writing to the eventfd in case no one is currently waiting.) This is not so much for saving memory copies but to save syscalls.

From my own unscientific benchmarks (i e, running “top”), this saves us ~10% – 25% of CPU power in low latency use cases, half of that being on the client side.

The third week of October was quite action-packed, with a whole bunch of conferences happening in Düsseldorf. The Linux audio developer community as well as the PulseAudio developers each had a whole day of discussions related to a wide range of topics. I’ll be summarising the events of the PulseAudio mini summit day here. The discussion was split into two parts, the first half of the day with just the current core developers and the latter half with members of the community participating as well.

I’d like to thank the Linux Foundation for sparing us a room to carry out these discussions — it’s fantastic that we are able to colocate such meetings with a bunch of other conferences, making it much easier than it would otherwise be for all of us to converge to a single place, hash out ideas, and generally have a good time in real life as well!

Happy faces — incontrovertible proof that everyone loves PulseAudio!

With a whole day of discussions, this is clearly going to be a long post, so you might want to grab a coffee now. :)

We have a few blockers for 6.0, and some pending patches to merge (mainly HSP support). Once this is done, we can proceed to our standard freeze → release candidate → stable process.

For simplifying packaging, it would be nice to be able to build all the available BlueZ module backends in one shot. There wasn’t much opposition to this idea, and David (Henningsson) said he might look at this. (as I update this before posting, he already has)

We briefly discussed plans around the recently introduced shared ringbuffer channel code for communication between PulseAudio clients and the server. We talked about the performance benefits, and future plans such as direct communication between the client and server-side I/O threads.

Tanu (Kaskinen) has a long-standing set of patches to add a generic routing framework to PulseAudio, developed by notably Jaska Uimonen, Janos Kovacs, and other members of the Tizen IVI team. This work adds a set of new concepts that we’ve not been entirely comfortable merging into the core. To unblock these patches, it was agreed that doing this work in a module and using a protocol extension API would be more beneficial. (Tanu later did a demo of the CLI extensions that have been made for the new routing concepts)

As a consequence of the discussion around the routing framework, David mentioned that he’d like to take forward Colin’s priority list work in the mean time. Based on our discussions, it looked like it would be possible to extend module-device-manager to make it port aware and get the kind of functionality we want (the ability to have a priority-order list of devices). David was to look into this.

Relatedly, we discussed the need to export the PA internal headers to allow externally built modules. We agreed that this would be okay to have if it was made abundantly clear that this API would have absolutely no stability guarantees, and is mostly meant to simplify packaging for specialised distributions.

Which led us to the other bit of infrastructure required to write modules more easily — making our protocol extension mechanism more generic. Currently, we have a static list of protocol extensions in our core. Changing this requires exposing our pa_tagstruct structure as public API, which we haven’t done. If we don’t want to do that, then we would expose a generic “throw this blob across the protocol” mechanism and leave it to the module/library to take care of marshalling/unmarshalling.

Alexander shared a number of his findings about resampler quality on PulseAudio, vs. those found on Windows and Mac OS. Some questions were asked about other parameters, such as relative CPU consumption, etc. There was also some discussion on how to try to carry this work to a conclusion, but no clear answer emerged.

It was also agreed on the basis of this work that support for libsamplerate and ffmpeg could be phased out after deprecation.

The discussion came around to the possibility of having a mode where (if the hardware supports it), PulseAudio just plays out samples without resampling, conversion, etc. This has been brought up in the past for “audiophile” use cases where the card supports 88.2/96 kHz and higher sample rates.

No objections were raised to having such a mode — I’d like to take this up at some point of time.

Alexander has some code for filtering low frequencies for the LFE channel, currently as a virtual sink, that could eventually be integrated into the core.

David raised a question about the current status of rtkit and whether it needs to exist, and if so, where. Lennart brought up the fact that rtkit currently does not work on systemd+cgroups based setups (I don’t seem to have why in my notes, and I don’t recall off the top of my head).

The conclusion of the discussion was that some alternate policy method for deciding RT privileges, possibly within systemd, would be needed, but for now rtkit should be used (and fixed!)

Discussions came up about the possibility of using kdbus and/or memfd for the PulseAudio transport. This is interesting to me, but there doesn’t seem to be an immediately clear benefit over our SHM mechanism in terms of performance. Some work needs to be done to evaluate how this could be used and what the benefit would be.

David has now submitted patches for controls that affect multiple outputs (such as “Headphone+LO”). These are currently being discussed.

Tanu would like to add code to support collecting audio streams into “audio groups” to apply collective policy to them. I am supposed to help review this, and Colin mentioned that module-stream-restore already uses similar concepts.

Tanu proposed the addition of new objects to represent streams and objects. There didn’t seem to be consensus on adding these, but there was agreement of a clear need to consolidate common code from sink-input/source-output and sink/source implementations. The idea was that having a common parent object for each pair might be one way to do this. I volunteered to help with this if someone’s taking it up.

Alexander brought up the need for a filter API in PulseAudio, and this is something I really would like to have. I am supposed to sketch out an API (though implementing this is non-trivial and will likely take time).

David plans to see if we can use profile availability to help determine when an HDMI device is actually available.

The usability of flat-volumes for browser use cases (where the volume of streams can be controlled programmatically) was discussed, and my patch to allow optional opt-out by a stream from participating in flat volumes came up. Tanu and I are to continue the discussion already on the mailing list to come up with a solution for this.

Alexander raised concerns about the quality of rewinding code in some of our filter modules. The agreement was that we needed better documentation on handling rewinds, including how to explicitly not allow rewinds in a sink. The example virtual sink/source code also needs to be adjusted accordingly.

Wim Taymans’ work on adding back HSP support to PulseAudio came up. Since the meeting, I’ve reviewed and merged this code with the change we want. Speaking to Luiz Augusto von Dentz from the BlueZ side, something we should also be able to add back is for PulseAudio to act as an HSP headset (using the same approach as for HSP gateway support).

Takashi Iwai raised a question about what a good way to run PA in a container was. The suggestion was that a tunnel sink would likely be the best approach.

Based on discussion from the previous day at the Linux Audio mini-summit, I’m supposed to look at the possibility of consolidating the various mixer configuration formats we currently have to deal with (primarily UCM and its implementations, and Android’s XML format).

(thanks to Tanu, David and Peter for reviewing this)

There are a lot of howtos written on this topic, but most didn't work for me. Irky's howto is very good. I'm mirroring his files here and summarising his howto. If you have any questions, look at his article.

The Dockstar is a special device, because it does not support serial boot like other Marvell Kirkwood devices. For example, when you have bricked a Seagate GoFlex, you can recover it over serial boot without JTAG. What is serial boot? Recover without jtag

This howto is for the Seagate Dockstar tested on archlinux with openocd 0.8!

You need only a buspirate with a jtag firmware, no serial is needed here.

openocd -f dockstar.cfg

sheevaplug_init

nand probe 0

nand erase 0 0x0 0xa0000

nand write 0 uboot.j.kwb 0 oob_softecc_kw

Headsets come in many sorts and shapes. And laptops come with different sorts of headset jacks – there is the classic variant of one 3.5 mm headphone jack and one 3.5 mm mic jack, and the newer (common on smartphones) 3.5 mm headset jack which can do both. USB and Bluetooth headsets are also quite common, but that’s outside the scope for this article, which is about different types of 3.5 mm (1/8 inch) jacks and how we support them in Ubuntu 14.04.

You’d think this would be simple to support, and for the classic (and still common) version of having one headphone jack and one mic jack that’s mostly true, but newer hardware comes in several variants.

If we talk about the typical TRRS headset – for the headset itself there are two competing standards, CTIA and OMTP. CTIA is the more common variant, at least in the US and Europe, but it means that we have laptop jacks supporting only one of the variants, or both by autodetecting which sort has been plugged in.

Speaking of autodetection, hardware differs there as well. Some computers can autodetect whether a headphone or a headset has been plugged in, whereas others can not. Some computers also have a “mic in” mode, so they would have only one jack, but you can manually retask it to be a microphone input.

Finally, a few netbooks have one 3.5 mm TRS jack where you can plug in either a headphone or a mic but not a headset.

So, how would you know which sort of headset jack(s) you have on your device? Well, I found the most reliable source is to actually look at the small icon present next to the jack. Does it look like a headphone (without mic), headset (with mic) or a microphone? If there are two icons separated by a slash “/”, it means “either or”.

For the jacks where the hardware cannot autodetect what has been plugged in, the user needs to do this manually. In Ubuntu 14.04, we now have a dialog:

In previous versions of Ubuntu, you would have to go to the sound settings dialog and make sure the correct input and output were selected. So still solvable, just a few more clicks. (The dialog might also be present in some Ubuntu preinstalls running Ubuntu 12.04.)

So in userspace, we should be all set. Now let’s talk about kernels and individual devices.

Quite common with Dell machines manufactured in the last year or so, is the version where the hardware can’t distinguish between headphones and headsets. These machines need to be quirked in the kernel, which means that for every new model, somebody has to insert a row in a table inside the kernel. Without that quirk, the jack will work, but with headphones only.

So if your Dell machine is one of these and not currently supporting headset microphones in Ubuntu 14.04, here’s what you can do:

Notes for people not running Ubuntu

Four years and what seems like a lifetime ago, I jumped aboard the ship Collabora Multimedia, and set sail for adventure and lands unknown. We sailed through strange new seas, to exotic lands, defeated many monsters, and, I feel, had some positive impact on the world around us. Last Friday, on my request, I got dropped back at the shore.

I’ve had an insanely fun time at Collabora, working with absurdly talented and dedicated people. Nevertheless, I’ve come to the point where I feel like I need something of a break. I’m not sure what’s next, other than a month or two of rest and relaxation — reading, cycling, travel, and catching up with some of the things I’ve been promising to do if only I had more time. Yes, that includes PulseAudio and GStreamer hacking as well. :-)

And there’ll be more updates and blog posts too!

The Sailfish XMPP client integrates perfectly into your contacts, but it doesn't work with your own Jabber server as long as you are using a self-signed certificate. This is because SailfishOS checks the server certificate by default, which is great! But there is no GUI to add your own CA or certificate, so you can't connect to a server which uses an unknown certificate. IMHO commercial Certificate Authorities are broken by design, but that's another story :). The usual answer for getting Jabber to work is to disable the certificate check for your XMPP account. That disables every feature of SSL (which is not much, as long as hundreds of CAs are in your chain). With checking you have a little bit more security than nothing. As an alternative, you can add the certificates to the telepathy chain. The Jabber client (telepathy-gabble) has an additional folder for certificates. telepathy adds certificates from /home/nemo/.config/telepathy/certs/ before loading all certificates from /etc/pki/tls/certs/ca-bundle.crt. Just put your certificate into that folder.

# mkdir -p /home/nemo/.config/telepathy/certs/

# cp /home/nemo/Downloads/jabber-ca_or_cert.crt /home/nemo/.config/telepathy/certs/

I re-added my Jabber account to make the certificate work. Maybe a reboot will apply your certificate as well.

(Figure: ~100 samples of a 440Hz sine wave)

The SmartWatch 2 is Sony's new smartwatch. I got one at a hackathon in Berlin, where everybody who attended got one from Sony. I'd like to open it, but I'm a little bit scared of breaking its waterproofness. So I'll wait for iFixit or another disassembly website. Still, I'm interested in what's inside and how it works.

The software side depends heavily on your Android phone. There are some applications running directly on the watch, like the alarm clock, settings and countdown. Everything else runs on your phone and uses the watch's display as a canvas. Yes, you are writing over Bluetooth into a canvas. When you tap on your watch, the Sony Smartwatch app on your phone sends a broadcast intent which starts your app. But that is a service in the background. For more information look into ligi's github repo.

Now let's get into the firmware and hardware. As Sony has already written for the SmartWatch (1), you can easily access the bootloader. It's a DFU-compatible bootloader, as already described in Sony's hacker documentation.

dfu-util -l will show you when it's connected.

You can read and write it. Let's look into it.

dfu-util -l

Found DFU: [0fce:f0fa] devnum=0, cfg=1, intf=0, alt=0, name="@Internal Flash /0x08000000/03*016Kg,01*016Kg,01*064Kg,07*128Kg,03*016Kg,01*016Kg,01*064Kg,07*128Kg"

Found DFU: [0fce:f0fa] devnum=0, cfg=1, intf=0, alt=1, name="@eMMC /0x00000000/01*512Mg"

I think all firmware is placed within the first flash, the internal one. An eMMC is a soldered-on SD card. Because we have the same partition layout twice, I think this is used for OTA firmware updates. It's a common pattern on embedded devices: double the size of your flash and split it into two identical parts. I'll name the first part A and the other half B. We are booting part A. When an OTA update comes in, the firmware writes it into part B and tells the bootloader: 'Try the B partition, but only once.' Now the device reboots and the bootloader boots part B. When the new firmware has booted successfully, it tells the bootloader 'Boot B every time now'. If the new firmware fails in B, the device has to be rebooted and falls back to booting part A. This can be done automatically by a hardware watchdog.

I believe on the eMMC we'll find all the additional icons and application names we installed via the Android phone. Remember, the smartwatch is only a remote display with sensors. Nothing more.
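The try-once scheme described above can be sketched in a few lines of Python. This is only a model of the general A/B pattern, not Sony's bootloader: the `BootState` class and the function names are made up for illustration, and I don't know how the real firmware stores its flags.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BootState:
    """Hypothetical flags a bootloader would keep in persistent storage."""
    active: str = "A"            # slot that is known to boot
    trial: Optional[str] = None  # slot to try exactly once, if any


def other(slot: str) -> str:
    return "B" if slot == "A" else "A"


def stage_update(state: BootState) -> None:
    """Running firmware wrote an update into the inactive slot."""
    state.trial = other(state.active)


def choose_slot(state: BootState) -> str:
    """Bootloader decision: a pending trial is consumed on first use."""
    if state.trial is not None:
        slot, state.trial = state.trial, None
        return slot
    return state.active


def confirm(state: BootState, slot: str) -> None:
    """New firmware booted fine and makes its slot the default."""
    state.active = slot


state = BootState()
stage_update(state)
first_boot = choose_slot(state)   # tries B once
second_boot = choose_slot(state)  # B never confirmed, so back to A
```

Because the trial flag is cleared before the new firmware even starts, a crash or a watchdog reset automatically lands in the old slot on the next boot.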

Let's disassemble the firmware. Put your device into DFU mode. DFU also has upload capabilities. Upload means from the device to a file; download is for firmware flashing. Upload the 2M flash into a file.

dfu-util -a 0 -U /tmp/smartwatch_internal -s 0x08000000:2097152
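The length 2097152 passed to -s can be cross-checked against the memory layout string that dfu-util -l printed. Here is a small Python sketch that sums up the sectors. It assumes the usual DfuSe naming scheme (count*size, then a unit letter, then a sector type letter); `parse_layout` and `total_size` are helper names I made up.

```python
import re

# Unit letters as I understand the DfuSe layout strings (assumption).
UNITS = {" ": 1, "B": 1, "K": 1024, "M": 1024 * 1024}


def parse_layout(name):
    """Return (start_address, [(sector_count, sector_size_bytes), ...])."""
    _label, addr, sectors = name.split("/")
    regions = []
    # e.g. "03*016Kg" -> 3 sectors of 16 KiB, type letter "g"
    for count, size, unit in re.findall(r"(\d+)\*(\d+)([ BKM])[a-g]", sectors):
        regions.append((int(count), int(size) * UNITS[unit]))
    return int(addr, 16), regions


def total_size(regions):
    return sum(count * size for count, size in regions)


INTERNAL = ("@Internal Flash /0x08000000/03*016Kg,01*016Kg,01*064Kg,07*128Kg,"
            "03*016Kg,01*016Kg,01*064Kg,07*128Kg")
EMMC = "@eMMC /0x00000000/01*512Mg"

addr, regions = parse_layout(INTERNAL)
print(hex(addr), total_size(regions))  # 0x8000000 2097152
```

Each half of the internal flash adds up to 1 MiB, which fits the guess about two identical partitions.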

Now let's do a simple strings /tmp/smartwatch_internal.
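If you don't have strings at hand, roughly the same can be done in Python. This is a simplified stand-in, not a reimplementation of the real tool: it only looks for runs of printable ASCII, and `ascii_strings` is a name I picked.

```python
import re


def ascii_strings(data: bytes, min_len: int = 4):
    """Return all runs of at least min_len printable ASCII characters."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(data)]


# Tiny made-up sample instead of the real dump:
sample = b"\x00\x01main.c\x00ab\x00uC/OS\xff"
found = ascii_strings(sample)
print(found)  # ['main.c', 'uC/OS']
```

For the real dump, read the file first with open("/tmp/smartwatch_internal", "rb").read() and pass the bytes to ascii_strings.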

As we know from the settings menu we have an STM32F42x, a Cortex M4.

Look at the filenames.

Technical Details:

type: chip [interfaces] (detailed description)

CPU: STM32F4xx (STM32F-427 or 429)

RAM: 256k

ROM/flash: 2M

eMMC: 512M? [connected over sdio] (maybe less, I could only load 128M over dfu-util. Could also be an edge case in dfu-util)

BT: STLC2690 (low power but only BT 3.0. I guess low power does not mean BT 4.0 low energy. But it seems to have its own Cortex M3 + fw upgrade functionality)

Accelerometer: bma250 [SPI (4-wire, 3-wire), i2c, 2 interrupt pins] (Bosch Sensortec)

Compass: ak8963 [SPI, I2C] (really? Sony didn't expose it, or is this just a leftover from a debug board?)

NFC-tag: ntag203f [interrupt pin for rf-activity] (only a tag - the mcu only knows if it got read)

Touch: ttsp3 (I'm not sure if this is a Cypress TTSP with i2c)

LiIonController: Max17048 [i2c] (fuel gauge and battery controller)

Light/AmbientSensor: Taos TSL2771 [I2C]

RTC: included in the CPU

Display: TC358763XBG [SPI (3,4 wire), SSI for display data; i2c, spi, pwm for control] (Display Controller)

Buzzer: GPIO wired

Most of the sensors and actuators are already supported by the Linux kernel because they are built into some (Sony) smartphones. No, I don't want to run Linux on it. But we already have working drivers which we can adapt for an alternative firmware.

Search the web or GitHub for STM32F4xx_StdPeriph_Driver, which is a freely available SDK. I don't think they wrote a complete firmware from scratch. Because of some strings, I guess it's a 'uCos' extended to their needs.

Sony, please provide us with the correct wiring of the sensors and actuators so we can build our own firmware?!

Up until now, we’ve been using Android’s AudioFlinger for playing back and recording audio. Starting with tomorrow’s image, that is no longer true. Instead we’re talking directly from PulseAudio to ALSA, or the Android audio HAL when necessary.

In short, here’s how PulseAudio now works:

This provides somewhat of a compromise between features and porting effort: By using the ALSA library whenever we can, we can access PulseAudio’s timer scheduling and dynamic latency features. Having the straightest path possible for playing back music should help efficiency (and by extension, battery life). At least in theory – we haven’t actually done measurements.